Introduction

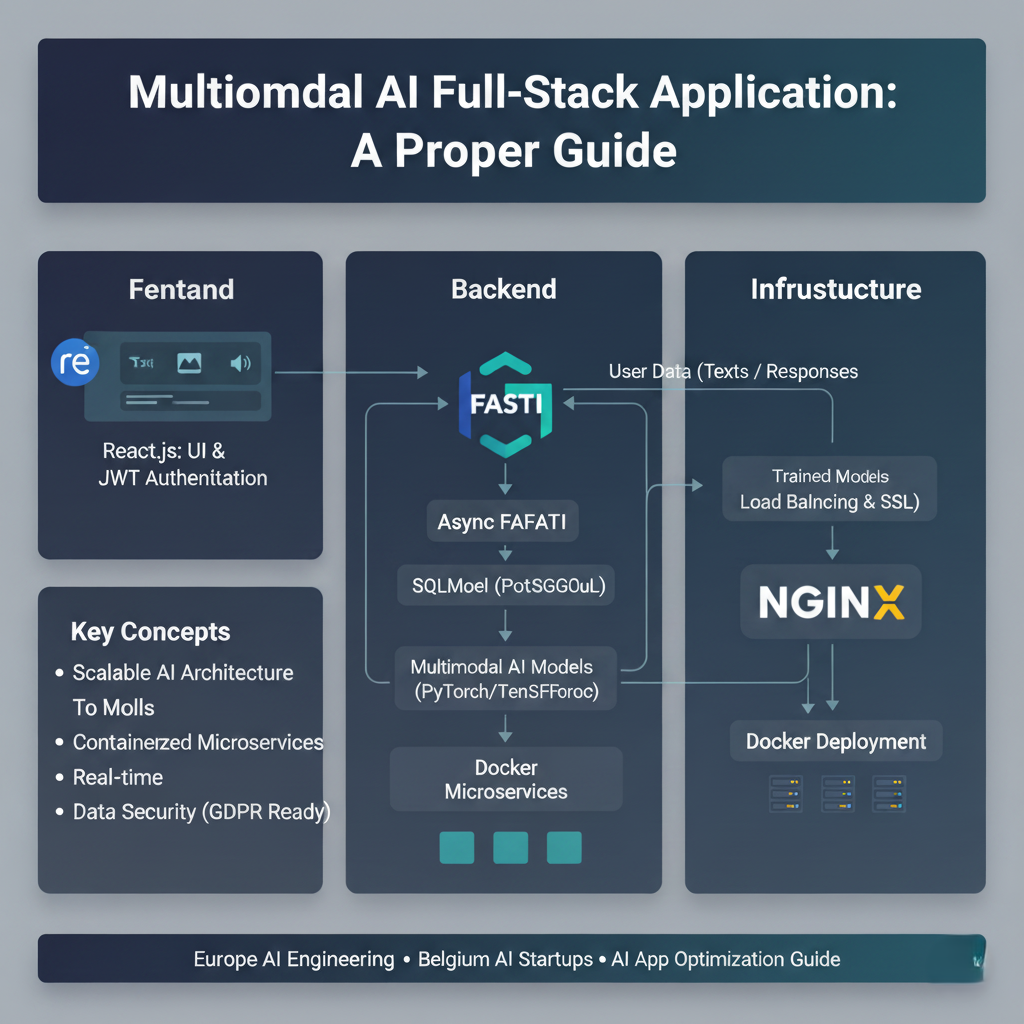

In the era of advanced Artificial Intelligence, where models can not only understand text but also analyze images, audio, and videos, multimodal AI applications have become the next big leap in modern software development. These applications integrate multiple data modalities — text, vision, and sound — to provide a seamless, human-like user experience. From AI assistants that interpret voice and generate responses, to intelligent dashboards that process real-time data streams, multimodal AI systems are redefining how humans interact with technology.

Building such applications, however, requires a deep understanding of full-stack development and AI systems engineering. Many developers face challenges in bridging the gap between machine learning backends and frontend interfaces, especially when scalability, containerization, and security are added to the mix.

If you’re a VibeCoder with less technical knowledge and want to build a full-stack application, then this blog is purely for you. It is written to serve as a step-by-step engineering guide for developers, startups, and AI enthusiasts who want to understand the complete architecture behind deploying an end-to-end multimodal AI solution.

In this comprehensive guide, we will explore — from a production-grade perspective — how to design, build, and deploy a multimodal AI full stack application using an optimized technology stack that includes:

-

FastAPI for building asynchronous, high-performance backend services integrated with AI models.

-

SQLModel (instead of SQLAlchemy) for efficient, Pythonic database modeling with data validation.

-

Docker for containerization, worker orchestration, and efficient deployment pipelines.

-

React for building secure, responsive, and scalable frontend interfaces with hooks and JWT-based authentication.

-

NGINX for SSL certification, load balancing, and traffic management across multiple protocols (HTTP, TCP, UDP).

This guide is not just a tutorial—it’s a complete engineering blueprint that will help you conceptualize and implement a robust, multimodal AI system from backend to frontend. We’ll break down each layer of the stack, focusing on architectural decisions, performance optimization, and integration best practices that align with real-world deployments used in AI startups and enterprise systems across Europe.

By the end of this blog, you will understand:

-

How to architect a FastAPI-based backend for multimodal AI workflows.

-

Why SQLModel offers superior advantages over traditional ORM frameworks like SQLAlchemy.

-

How to efficiently manage Docker containers, workers, and microservices in production.

-

The correct approach to handling authentication, state, and data flow in React using modern Hooks and JWT tokens.

-

How to configure NGINX for SSL termination, load balancing, and secure cross-origin communication.

This isn’t a surface-level overview—it’s a practitioner’s guide aimed at developers who want to build production-ready, scalable, and secure AI-driven applications that handle real-world workloads.

FastAPI – The Core of the Backend

In any modern multimodal AI full stack application, the backend is the central nervous system — it orchestrates data flow, model inference, user management, and inter-service communication. When building an AI-driven platform capable of handling asynchronous requests, multiple microservices, and real-time model inference, choosing the right backend framework is critical.

Among the available options such as Flask, Django, and Node.js, FastAPI stands out as one of the most performant and scalable solutions. It’s designed from the ground up for asynchronous execution, automatic validation, and seamless AI integration, making it ideal for both data-intensive and machine-learning-centric systems.

1. Why FastAPI for Multimodal AI Systems

FastAPI is built on top of Starlette (for async web handling) and Pydantic (for data validation), combining speed and reliability in a single lightweight framework. It’s one of the few Python frameworks that natively supports async I/O, type safety, and automatic OpenAPI documentation, which makes it a perfect fit for AI-oriented workloads.

Key Advantages:

-

Asynchronous processing: Enables concurrent API calls, critical for model inference or multimodal data streams (e.g., image upload + text input).

-

Type-hinted validation: Reduces runtime errors and simplifies large system maintenance.

-

Native JSON serialization: Perfect for multimodal payloads.

-

Easy AI integration: Seamlessly interacts with frameworks like PyTorch, TensorFlow, and Hugging Face Transformers.

-

Automatic docs: Built-in Swagger and Redoc documentation make API testing efficient and transparent.

When building multimodal AI systems, where requests can involve uploading a video, sending textual context, and requesting model inference all at once, asynchronous performance is essential. FastAPI achieves this through Python’s async and await syntax, ensuring non-blocking I/O during long-running operations such as image processing or neural inference.

2. Asynchronous Architecture and Performance Optimization

A common mistake developers make is to treat FastAPI like Flask — using synchronous endpoints for tasks that require concurrency. However, the true power of FastAPI lies in async-driven design.

When handling multimodal workflows, requests may involve multiple sub-tasks such as:

-

Fetching metadata from databases

-

Running model inference

-

Streaming intermediate results to frontend

-

Updating progress through a message broker

In a synchronous setup, these tasks block each other, leading to performance bottlenecks.

In an asynchronous architecture, each task executes concurrently, utilizing event loops efficiently — making FastAPI ideal for real-time AI inference and streaming applications.

Async Design Principles for AI Workloads:

-

Async database calls: Use async drivers such as

asyncpgor SQLModel’s async engine. -

Async model inference: Deploy model inference on worker threads or background tasks to prevent main thread blocking.

-

Task queuing: Use Celery or Redis Queue for heavy multimodal processing pipelines.

-

WebSocket integration: Use

FastAPI WebSocketendpoints to stream AI model responses (e.g., token-by-token generation or image rendering progress).

With these design patterns, FastAPI can scale from small demo APIs to large enterprise-level multimodal services processing thousands of concurrent AI inference requests.

3. Why SQLModel Over SQLAlchemy

While SQLAlchemy is a powerful ORM, it’s often verbose, complex, and requires manual data validation. SQLModel, created by the same developer as FastAPI (Sebastián Ramírez), merges the strengths of Pydantic and SQLAlchemy into a single, modern ORM that fits perfectly into the FastAPI ecosystem.

Advantages of SQLModel:

-

Unified schema definition: One class defines both the ORM table and the Pydantic model.

-

Automatic type validation: Thanks to Pydantic integration, request/response models validate automatically.

-

Simplified queries: Cleaner syntax reduces overhead for new developers.

-

Native async support: Works smoothly with async database engines like PostgreSQL and SQLite.

-

Better maintainability: One model can handle both database persistence and API schema generation.

In a multimodal AI setup, where the backend stores embeddings, logs, and inference metadata, SQLModel simplifies handling complex schemas without compromising performance or readability.

Typical Use Cases in AI Projects:

-

Logging model inference requests and outputs.

-

Storing user profiles, preferences, and feedback loops.

-

Managing AI model metadata (versioning, hyperparameters, checkpoints).

-

Tracking multimodal data (text prompts, uploaded images, or extracted features).

This integration of SQLModel within FastAPI provides both developer efficiency and data consistency, crucial for maintaining reproducible AI experiments and ensuring smooth communication between microservices.

4. Optimizing Backend Logic and AI Integration

When integrating AI components such as Hugging Face Transformers, LangChain, or OpenAI APIs, performance and modularity become key. AI models often have high computational overhead, and without careful optimization, backend responsiveness can degrade significantly.

Strategies for Optimizing Backend Logic:

-

Model Preloading:

Load models once during app startup instead of per-request initialization. This avoids repeated GPU/CPU memory allocations. -

Task Offloading:

Heavy AI operations (e.g., embeddings generation, image-to-text transformation) should run on background workers using Celery or Redis Task Queues. -

Microservices Approach:

Split large AI functionalities (e.g., text generation, image analysis, speech processing) into isolated FastAPI microservices.-

Each service can be containerized independently with Docker.

-

Internal communication can use REST, gRPC, or message brokers.

-

-

Caching Inference Results:

Use Redis or Memcached to cache frequent inference requests. This drastically reduces response latency. -

Batch Processing:

For large datasets or streaming inputs, process multiple samples concurrently to optimize hardware utilization. -

Async File Handling:

For multimodal uploads (images, PDFs, audio), use FastAPI’sUploadFile(which is async by default) to handle I/O efficiently.

By combining these optimization techniques, a FastAPI backend can maintain low latency, high throughput, and fault tolerance — even when integrating resource-intensive AI models.

5. Microservices-Based Architecture

A microservices architecture enhances modularity, scalability, and maintainability — especially in AI-driven ecosystems where each service may handle a specific task (e.g., model serving, data preprocessing, or analytics).

Benefits of a Microservices Design:

-

Fault isolation: One service failure doesn’t bring down the entire system.

-

Independent scaling: Services can scale separately based on workload.

-

Technology flexibility: Each service can use the optimal AI library or database.

-

Continuous deployment: Enables easier CI/CD pipelines and updates.

Common Microservices in a Multimodal AI App:

-

Inference Service: Handles model prediction tasks (e.g., Hugging Face models or custom neural networks).

-

Data Service: Manages structured data storage and retrieval (e.g., SQLModel/PostgreSQL).

-

Auth Service: Manages JWT tokens and user authentication flows.

-

Media Service: Handles file storage (images, audio, videos) via cloud or object stores.

-

Notification Service: Handles real-time updates or WebSocket communication.

FastAPI’s lightweight nature and async design make it a perfect foundation for such modular microservices, each running independently but communicating efficiently.

6. API Gateway and Integration

When managing multiple microservices, it’s important to expose them through a unified API gateway. NGINX or a dedicated FastAPI gateway can aggregate requests, apply authentication, and route traffic to the correct service.

This approach enables:

-

Centralized request validation and security enforcement.

-

Unified logging and rate-limiting mechanisms.

-

Seamless scaling and service discovery.

The backend layer thus becomes a distributed but coordinated ecosystem capable of handling multimodal data flows in a production-grade AI system.

Docker – Efficient Containerization and Worker Management

In modern AI and full-stack application development, Docker is the backbone of deployment and scalability. When building a multimodal AI full-stack application that combines FastAPI microservices, machine learning inference, databases, and frontend clients, containerization is not optional — it’s essential.

Docker allows each part of your system (FastAPI, React, NGINX, Celery, Redis, and AI models) to run in isolated containers, ensuring consistent environments across development, staging, and production. This isolation eliminates the infamous “works on my machine” issue and enables seamless orchestration of microservices for distributed AI systems.

1. Why Docker for Multimodal AI Applications

When building applications that include FastAPI backend, AI model servers, and frontend clients, reproducibility and scalability are critical. Docker provides an easy and efficient way to bundle dependencies, Python environments, libraries, and configurations into containers that behave identically on any machine.

Key Benefits:

-

Reproducibility: Consistent environment across teams and systems.

-

Scalability: Easily spin up multiple instances to handle heavy inference loads.

-

Isolation: Prevents library conflicts between AI models and app dependencies.

-

Portability: Runs seamlessly on local machines, cloud VMs, or Kubernetes clusters.

-

Rapid deployment: Faster CI/CD cycles and automated service provisioning.

In multimodal AI applications, where one microservice might run a text model (like GPT or Falcon), another may handle vision tasks, and another may perform speech processing, Docker provides the perfect isolation boundary for these diverse workloads.

2. Building Containers Efficiently

A poorly optimized Docker image can lead to large build sizes, slow deployments, and resource inefficiencies. Optimizing Docker builds is particularly important in AI systems, where containers may include heavy dependencies such as PyTorch, Transformers, or CUDA.

Best Practices for Efficient Docker Images:

-

Use lightweight base images: Start from minimal images like

python:3.11-sliminstead of full distributions. -

Multi-stage builds: Separate build and runtime environments to reduce image size.

-

Cache dependencies: Install dependencies before copying code to leverage Docker layer caching.

-

Avoid unnecessary packages: Only include the essentials (e.g., model binaries, inference scripts).

-

Leverage

.dockerignore: Exclude logs, temp files, and local datasets to reduce context size. -

Use environment variables: For configuration instead of hardcoded settings, improving portability.

Example Workflow:

-

Container 1: FastAPI service with async APIs and AI inference.

-

Container 2: Celery worker for background processing.

-

Container 3: PostgreSQL database.

-

Container 4: Redis cache.

-

Container 5: NGINX reverse proxy and load balancer.

This modular approach keeps your architecture clean and makes scaling or debugging specific components straightforward.

3. Managing Workers and Background Tasks

AI applications often perform heavy operations — generating embeddings, running inference pipelines, or precomputing datasets — which can block the main API thread if not managed properly. Dockerized workers solve this problem elegantly.

By using Celery workers or FastAPI BackgroundTasks, you can offload long-running AI computations into dedicated worker containers. These containers can:

-

Scale independently based on queue size.

-

Run on separate nodes or GPUs.

-

Be restarted without affecting other services.

Key Worker Management Tips:

-

Use message brokers like Redis or RabbitMQ for task communication.

-

Set concurrency levels based on CPU/GPU resources available.

-

Monitor queues using tools like Flower (for Celery).

-

Deploy dedicated GPU containers for model inference.

This decoupled design ensures high throughput, reduces latency for end-users, and makes the system robust against load spikes — especially important in multimodal inference scenarios where image, text, and video processing may run simultaneously.

4. Container Orchestration and Scalability

Running containers locally is useful for development, but deploying and managing them in production requires container orchestration. This is where Docker Compose and Kubernetes play vital roles.

Using Docker Compose:

-

Define all services (FastAPI, React, Redis, NGINX) in a single

docker-compose.yml. -

Manage inter-service networking automatically.

-

Easily scale services:

docker-compose up --scale fastapi=3 -

Simplify local development by simulating full production stacks.

Using Kubernetes (K8s):

For enterprise-grade scalability and fault tolerance, Kubernetes orchestrates containers across clusters.

Advantages for AI Systems:

-

Automatic scaling (Horizontal Pod Autoscaler).

-

Resource quotas to balance CPU/GPU utilization.

-

Rolling updates with zero downtime.

-

Self-healing and service discovery.

In multimodal AI setups, where you may have GPU-heavy inference services, lightweight FastAPI APIs, and multiple background workers, Kubernetes helps allocate resources dynamically and keep everything running efficiently.

5. Performance Optimization in Docker Environments

Optimizing resource utilization is crucial when containers host compute-heavy AI workloads.

Optimization Techniques:

-

Limit CPU/GPU usage: Assign specific cores or GPUs using Docker runtime flags.

-

Persistent caching: Use volume mounts for caching model weights (avoid reloading on restart).

-

Efficient networking: Use shared Docker networks for faster inter-service communication.

-

Log management: Route logs to centralized services to prevent container bloat.

-

Health checks: Define container-level health checks for proactive failure recovery.

These strategies ensure that your AI application remains fast, stable, and scalable across environments — whether deployed on a single VM or distributed cluster.

6. Continuous Integration and Deployment (CI/CD)

Automation is vital for modern full-stack AI pipelines. Once Dockerized, your application can easily be integrated with CI/CD tools like GitHub Actions, GitLab CI, or Jenkins to automate testing, building, and deployment.

CI/CD Workflow Overview:

-

Build phase: Automatically build Docker images on code commit.

-

Test phase: Run unit and integration tests inside containers.

-

Deploy phase: Push production-ready images to container registry (e.g., Docker Hub, AWS ECR).

-

Deploy orchestration: Automatically update services on production servers or Kubernetes clusters.

This ensures consistent, repeatable, and secure deployments for AI-powered applications — a must-have for any team delivering real-world multimodal systems.

7. Docker Networking and Security

As applications grow, internal communication between containers (e.g., FastAPI <-> NGINX <-> React <-> Redis) becomes more complex. Docker provides a virtual network layer to manage these interactions securely.

Best Practices:

-

Use internal networks for service-to-service communication (not exposed to the internet).

-

Limit public ports — only expose what’s necessary (e.g., NGINX proxy).

-

Use SSL/TLS certificates for encrypted API communication.

-

Isolate GPU containers if they handle sensitive data.

In combination with NGINX, you can enforce secure cross-origin communication, manage load balancing, and handle SSL offloading — all within a containerized infrastructure.

Docker is thus not just a deployment tool — it’s the foundation for efficient AI infrastructure management, ensuring every microservice, model, and frontend component runs in harmony.

React – Building a Robust Frontend for Multimodal AI Systems

In any multimodal AI full-stack application, the frontend is the user’s gateway to complex machine learning functionalities. Whether it’s a dashboard displaying model analytics, a chat interface interacting with a language model, or an image-to-video conversion tool — React provides the flexibility, performance, and modularity required to build seamless user experiences.

React, powered by hooks, context, and component-based architecture, is not only scalable but also highly compatible with backends like FastAPI through well-structured REST or WebSocket APIs. When combined with JWT-based authentication and real-time communication, React becomes a perfect frontend framework for AI-driven systems.

1. Why Choose React for Multimodal AI Applications

React’s virtual DOM, declarative syntax, and large ecosystem make it ideal for AI-integrated applications that must handle real-time updates and rich multimedia.

Core Advantages:

-

Dynamic rendering: Perfect for continuously changing inference outputs.

-

Component reusability: Ideal for modular dashboards, model controls, and media viewers.

-

Efficient state management: With hooks and context, managing async inference responses becomes simpler.

-

Integration-ready: Easily communicates with FastAPI’s REST or WebSocket endpoints.

-

SEO and performance optimization: With Next.js, React supports server-side rendering and faster page loads.

In multimodal systems, users often upload files, trigger model inference, and visualize results (e.g., AI-generated videos or embeddings). React can handle these interactions fluidly, ensuring minimal latency and maximum interactivity.

2. Using React Hooks for AI Workflows

Hooks are the backbone of modern React. They allow stateful logic to be reused across components without class structures — which is critical in complex AI-driven UIs.

Essential Hooks in Multimodal Apps:

-

useState: Manage prompt inputs, API responses, and model states.

-

useEffect: Handle async inference calls or stream updates when a state changes.

-

useContext: Maintain global state for authentication or active user sessions.

-

useReducer: Manage complex state transitions during model processing (e.g., queued → processing → completed).

-

useRef: Handle media elements like video preview or audio playback during AI model output rendering.

These hooks, when properly structured, ensure your app remains responsive and intuitive even when interacting with multiple backend services simultaneously.

3. JWT Authentication and Secure API Handling

Security is paramount in AI platforms, especially when handling user-specific data or confidential models. JWT (JSON Web Tokens) is a lightweight and secure method for maintaining authenticated sessions between React and FastAPI.

Typical Flow:

-

User logs in via React → sends credentials to FastAPI.

-

FastAPI validates and returns a signed JWT token.

-

React stores the token (typically in memory or HTTP-only cookies).

-

Every subsequent API call includes the token in the Authorization header.

Authorization: Bearer <jwt_token>

React intercepts all outgoing API requests, attaches the JWT automatically, and handles refresh logic when tokens expire. This ensures users maintain authenticated access to AI inference endpoints and protected routes, such as model dashboards or analytics panels.

Key JWT Practices:

-

Never store tokens in localStorage (use memory or secure cookies).

-

Use interceptors in Axios or Fetch API for token injection.

-

Protect sensitive inference routes in FastAPI with JWT middleware.

-

Implement token expiration and refresh flows.

With secure token handling, your application is protected from unauthorized access while maintaining a smooth, uninterrupted user experience.

4. Structuring API Calls Properly

Properly organized API handling is essential for clean and maintainable frontend architecture. In a multimodal AI setup, React often communicates with multiple microservices: inference servers, data APIs, authentication services, and media endpoints.

Best Practices:

-

Centralized API handler: Create a unified module for all REST calls.

-

Error boundaries: Capture and handle backend failures gracefully.

-

Async/await pattern: Use promises for clean async logic.

-

Retry logic: Re-attempt failed AI inference calls automatically with exponential backoff.

-

WebSocket listeners: For live updates from streaming models or backend workers.

This approach ensures your React app can manage complex API workflows while keeping the codebase clean, readable, and easy to debug.

5. Managing AI Inference States and User Feedback

A critical part of building AI frontends is giving users clear feedback during model operations. Multimodal inference can take several seconds — image generation, video synthesis, or large language responses all involve latency.

UI State Strategy:

-

Idle State: No request in progress.

-

Loading State: Model processing; show progress bar or spinner.

-

Success State: Display model output dynamically (text, image, or video).

-

Error State: Handle backend or network errors gracefully.

By structuring UI states in this manner, users always know what’s happening in the system — improving trust and experience.

6. Frontend Optimization Techniques

For multimodal AI applications, performance optimization ensures that rich visuals, large datasets, and complex interactions remain smooth even on client-side hardware.

Optimization Strategies:

-

Code-splitting and lazy loading: Load model dashboards or analytics components only when needed.

-

Memoization with useMemo/useCallback: Prevent redundant re-renders in state-heavy components.

-

Compression: Use gzip or Brotli for large JSON payloads.

-

CDN integration: Serve static assets like model preview videos or thumbnails from CDNs.

-

Image caching: Cache AI-generated media to improve subsequent load times.

-

Pagination and infinite scroll: For large datasets or inference histories.

These techniques maintain responsiveness, even when handling large multimodal responses like AI-generated video previews or embeddings visualizations.

7. Frontend Scalability and Integration with Backend

React’s modular design fits perfectly with a microservice backend. Each module (e.g., “Text Analysis,” “Image Processing,” or “Video Synthesis”) can communicate with its respective FastAPI microservice.

This loosely coupled integration allows teams to:

-

Update frontend modules without backend redeployment.

-

Deploy new AI features incrementally.

-

Connect to different inference APIs dynamically.

-

Scale frontend servers independently for high-traffic scenarios.

React’s flexibility combined with FastAPI’s asynchronous endpoints ensures your multimodal application remains highly scalable, maintainable, and ready for enterprise-level deployment.

8. Accessibility and Usability in AI Interfaces

Accessibility should not be overlooked — especially in AI systems that cater to users across industries. Ensure that:

-

Inputs and outputs are clearly labeled.

-

Color contrasts meet WCAG standards.

-

Components are keyboard-navigable.

-

Live regions announce AI status updates (e.g., “Model is generating output...”).

Inclusive design principles improve usability and make AI tools more approachable to non-technical audiences — crucial for products aimed at professionals, educators, or researchers.

React thus forms the interactive core of the multimodal AI experience — providing real-time responsiveness, secure API integration, and modular scalability.

NGINX – SSL, Cross-Origin Handling, and Load Balancing for AI Microservices

When developing a multimodal AI full-stack application, one of the most overlooked yet critical components of the system is NGINX. Acting as the reverse proxy, load balancer, and security gateway, NGINX ensures that your application’s multiple moving parts — FastAPI services, React frontend, Docker containers, and AI inference microservices — communicate efficiently, securely, and reliably.

For high-performance AI systems, especially those integrating asynchronous requests, real-time inference, and large file uploads (like images or videos), NGINX plays a pivotal role in ensuring smooth traffic flow, cross-origin safety, and SSL encryption.

1. The Role of NGINX in AI Architectures

In a multimodal AI ecosystem, multiple backend services coexist: FastAPI for core logic, Celery for background tasks, Redis for caching, React for UI, and Docker to encapsulate them. NGINX acts as the orchestrator at the networking layer, routing requests intelligently and ensuring optimal performance across the stack.

Core Responsibilities of NGINX:

-

Reverse proxying: Routes client requests to specific backend services.

-

Load balancing: Distributes incoming traffic among multiple backend instances.

-

SSL termination: Manages HTTPS connections and encryption.

-

CORS (Cross-Origin Resource Sharing): Handles cross-domain requests between frontend and backend.

-

Traffic control: Manages concurrent requests and optimizes throughput.

-

Static file serving: Efficiently serves React builds, logs, and model outputs.

Without NGINX, your AI stack would face challenges like connection bottlenecks, CORS errors, or uneven traffic distribution — all of which degrade user experience and system stability.

2. Implementing SSL Certificates for Secure Communication

Security is paramount in AI applications, especially when users exchange sensitive data such as authentication tokens, model outputs, or media files. SSL/TLS certificates ensure that all data between your frontend and backend remains encrypted.

Steps to Enable SSL:

-

Obtain certificates using Let’s Encrypt or a trusted CA.

-

Configure NGINX to use the certificate and private key.

-

Redirect all HTTP traffic to HTTPS.

-

Apply strong cipher suites for added protection.

Key Benefits:

-

Prevents man-in-the-middle attacks.

-

Ensures data confidentiality and integrity.

-

Improves SEO rankings (Google prioritizes HTTPS sites).

-

Enables secure WebSocket connections for live AI responses.

For AI microservices deployed within Docker containers, SSL can be terminated at the NGINX proxy layer — simplifying configuration inside containers and maintaining centralized control.

3. Cross-Origin Handling Using CORS and CBOR

Cross-Origin Resource Sharing (CORS) is a critical concern when your frontend (React) and backend (FastAPI) are hosted on different domains or ports. Improper configuration can block legitimate requests, especially when handling file uploads or WebSocket connections.

Best Practices for Cross-Origin Setup:

-

Allow specific origins (e.g., your domain or staging environment).

-

Enable CORS headers for only necessary HTTP methods (

GET,POST,OPTIONS). -

Restrict credentials and headers to prevent unauthorized access.

-

Handle preflight requests efficiently to reduce latency.

Using CBOR (Cross-Origin Binary Object Request):

CBOR provides a compact binary format for data exchange, making it highly efficient for AI inference results — especially when transmitting image tensors, embeddings, or large structured JSON.

When integrated with NGINX and FastAPI, CBOR reduces payload sizes and enhances performance for real-time inference or multimodal streaming.

4. Load Balancing Across AI Microservices

One of NGINX’s most powerful features is load balancing — essential for high-performance AI systems where multiple backend instances must share computational load.

Load Balancing Methods:

-

Round Robin: Default; distributes requests evenly among servers.

-

Least Connections: Routes traffic to the server with the fewest active connections.

-

IP Hash: Maintains client session consistency by binding requests to specific instances.

-

Hash-based: Custom strategy (e.g., distribute based on model type or task ID).

For AI workloads, this ensures balanced GPU utilization and prevents overloading specific servers. You can even dedicate specific instances to different model types — for example:

-

Node 1: Handles LLM (text) requests.

-

Node 2: Handles image/video inference.

-

Node 3: Handles embedding/vector retrieval.

NGINX automatically manages routing and failover, keeping the entire ecosystem robust.

5. Traffic Management and ReusePort Optimization

Handling large-scale concurrent AI requests requires efficient resource allocation. This is where NGINX’s reuseport directive becomes invaluable.

It allows multiple worker processes to listen on the same port, distributing incoming connections evenly at the kernel level — significantly improving throughput and latency under heavy load.

Traffic Optimization Techniques:

-

Enable

reuseport: Maximizes CPU core utilization. -

Tune worker processes: Match the number of NGINX workers to available CPU cores.

-

Connection keep-alive: Reuse existing TCP connections to reduce handshake overhead.

-

Enable HTTP/2: For multiplexed connections and improved concurrency.

-

Implement caching: Cache static responses like AI model metadata or analytics dashboards.

For multimodal AI applications with video processing or streaming AI outputs, these optimizations prevent service lag and ensure stable, real-time performance.

6. Protocol-Level Load Balancing (HTTP, TCP, UDP)

AI systems often communicate across different protocols — REST (HTTP), WebSockets (TCP), and sometimes UDP (for lightweight inference pings or metrics).

NGINX supports multi-protocol load balancing natively, which is essential when your system handles:

-

HTTP traffic: Standard web API requests from React or external clients.

-

TCP connections: Persistent sessions for WebSockets or model streaming.

-

UDP datagrams: Fast, connectionless tasks like telemetry or AI status broadcasting.

This unified approach ensures that all communication layers — from real-time inference streaming to monitoring signals — remain synchronized and reliable.

7. Advanced NGINX Configurations for AI Applications

To handle the complexity of full-stack AI infrastructures, NGINX can be fine-tuned with the following features:

Caching Layers:

Cache frequently accessed AI results or API metadata to reduce backend load.

Rate Limiting:

Prevent abuse or excessive inference requests per user/IP.

Compression:

Enable gzip/Brotli compression for AI responses, reducing payload size.

WebSocket Proxying:

Allow seamless real-time communication between AI models and React UI (for token-by-token text generation or video synthesis updates).

Static Content Optimization:

Serve AI-generated files (e.g., video previews, charts, JSON reports) directly from the proxy layer for reduced backend overhead.

These configurations not only enhance performance but also provide an additional security layer for microservices — essential when deploying on the cloud or public servers.

8. Monitoring and Observability

NGINX also supports advanced logging and integration with monitoring tools such as Prometheus, Grafana, and Elastic Stack (ELK). This allows developers to visualize traffic metrics, detect bottlenecks, and forecast system performance.

Metrics to Track:

-

Request latency and throughput.

-

Error rates per endpoint.

-

Cache hit/miss ratios.

-

SSL handshake times.

-

CPU/memory utilization across containers.

In AI systems with multiple moving parts, observability ensures proactive management — identifying issues before they impact end-users.

9. Combining NGINX with Docker and FastAPI

When deployed in a containerized environment, NGINX becomes the front-facing entry point of the system. In a typical architecture:

-

NGINX container: Handles routing, SSL, and load balancing.

-

FastAPI containers: Serve inference APIs.

-

React container: Delivers frontend assets.

-

Redis & Celery: Manage queues and caching.

This containerized orchestration, often managed via Docker Compose or Kubernetes, allows independent scaling, zero-downtime updates, and modular development — ideal for enterprise-grade multimodal AI platforms.

NGINX thus acts as the performance backbone of the AI infrastructure, providing secure, reliable, and high-speed routing between all services in your stack ensuring that users experience real-time interaction with complex multimodal AI capabilities.

Conclusion – Building a Scalable Multimodal AI-Powered Application

Building a multimodal AI full-stack application is a complex yet rewarding endeavor that combines intelligent system design, optimized infrastructure, and robust front-end engineering. Throughout this guide, we’ve explored the full lifecycle — from FastAPI as an asynchronous backend and SQLModel for efficient data management, to Dockerized microservices, a responsive React frontend, and NGINX as a secure, high-performance gateway.

This integrated architecture forms the blueprint for scalable, production-grade AI ecosystems that can handle multiple modalities — text, images, video, and audio — in real time.

1. Recap of the Architecture

A properly designed multimodal AI stack has several distinct layers, each optimized for a specific function yet interconnected seamlessly through APIs and container orchestration.

FastAPI (Backend Core):

-

Asynchronous, type-safe, and ideal for concurrent AI inferences.

-

Integrates naturally with machine learning models (Hugging Face, OpenAI, LangChain).

-

Works efficiently with SQLModel, providing a unified ORM and validation layer.

-

Powers microservices that handle text, image, or video AI pipelines independently.

Docker (Infrastructure Layer):

-

Ensures environment consistency and modular scalability.

-

Allows independent deployment of each microservice — FastAPI, Redis, Celery, React, NGINX.

-

Enables GPU containerization for deep learning workloads.

-

Simplifies orchestration with Compose or Kubernetes for distributed AI clusters.

React (Frontend Layer):

-

Delivers dynamic and responsive user interfaces.

-

Uses hooks and context for real-time inference updates.

-

Manages authentication securely with JWT.

-

Communicates efficiently with asynchronous FastAPI endpoints.

NGINX (Gateway and Proxy Layer):

-

Routes traffic between frontend and backend securely using SSL.

-

Handles load balancing for high-concurrency inference workloads.

-

Implements CORS, compression, caching, and protocol-level optimization.

-

Supports HTTP, TCP, and WebSocket protocols for multimodal streaming.

Each layer is essential; together, they ensure a high-performance ecosystem capable of supporting thousands of requests per second while maintaining security, efficiency, and flexibility.

2. The Importance of Asynchronous Design

Modern multimodal AI applications must process large volumes of data and simultaneous user requests — asynchronous programming makes this possible.

FastAPI’s non-blocking architecture, paired with background workers and WebSockets, ensures:

-

High throughput under load.

-

Real-time streaming of AI responses (e.g., text generation or image synthesis).

-

Minimal resource contention between tasks.

-

Scalability across distributed inference endpoints.

This async-first design enables applications to serve interactive, intelligent experiences — such as conversational agents, multimodal dashboards, and real-time video generation tools — without compromising responsiveness.

3. The Role of Microservices in AI Scalability

The microservices architecture transforms how AI applications scale and evolve. Instead of a monolithic backend, microservices allow developers to isolate and optimize each component independently.

Advantages in AI Workflows:

-

Independent scaling: Scale GPU-heavy model inference separately from API servers.

-

Fault isolation: Service failures do not affect the overall application.

-

Technology flexibility: Use specialized frameworks for each model type (e.g., TensorFlow for image tasks, PyTorch for NLP).

-

Rapid iteration: Deploy new models or features without downtime.

In practice, you might have one service for text summarization, another for image captioning, and another for video synthesis — all communicating through REST or message queues, orchestrated by NGINX and Docker.

4. Deployment, CI/CD, and Observability

To move from prototype to production, the system must be deployable, observable, and maintainable. Docker simplifies deployments, while CI/CD pipelines ensure continuous improvement and reliability.

Best Practices:

-

Automate builds and tests using GitHub Actions or GitLab CI.

-

Push images to registries (Docker Hub, AWS ECR, or GCP Artifact Registry).

-

Deploy via Kubernetes for load balancing, self-healing, and automatic scaling.

-

Integrate monitoring through Prometheus, Grafana, or ELK Stack to visualize performance and detect anomalies.

This continuous feedback loop ensures that both application and infrastructure remain resilient under real-world AI workloads.

5. Optimizing for Performance and Cost

Multimodal AI systems can be computationally expensive. Optimization at every layer can drastically reduce latency and infrastructure costs.

Key Strategies:

-

Use model quantization (LoRA/QLoRA) for faster inference.

-

Cache embeddings and frequent queries using Redis.

-

Implement request throttling to prevent API abuse.

-

Apply GPU resource scheduling in Docker and Kubernetes.

-

Deploy models close to users via edge servers for minimal latency.

These optimizations ensure you get enterprise-grade performance even on limited infrastructure, which is especially beneficial for startups or research teams operating with budget constraints.

6. Real-World Use Cases of Multimodal AI Full-Stack Applications

Modern businesses across Europe — especially in Belgium, Germany, France, and the Netherlands — are rapidly adopting AI-based platforms for both internal automation and customer engagement.

Use Case Examples:

-

Retail & E-commerce: AI-driven virtual stores with real-time product visualization.

-

Healthcare: Multimodal diagnostic platforms combining imaging, text, and sensor data.

-

Education: Interactive learning tools with AI tutors, text-to-speech, and vision analysis.

-

Industrial AI: Predictive maintenance using IoT sensor data visualized via React dashboards.

-

Creative industries: AI content generation for marketing, music, and design using multimodal inputs.

Each use case demonstrates how combining FastAPI’s backend intelligence with React’s dynamic UI and NGINX’s traffic management creates reliable, enterprise-ready AI ecosystems.

7. Future-Proofing Your AI System

AI technology evolves rapidly, with new frameworks and tools emerging monthly — from LangGraph and LangChain to Hugging Face Transformers and OpenAI Assistants API.

By designing your system with microservices, Docker, and standardized APIs, you future-proof your infrastructure to integrate new models and workflows seamlessly.

Key steps to future-proof your system:

-

Adopt modular design principles.

-

Use container orchestration for flexibility.

-

Implement versioned APIs for model upgrades.

-

Integrate vector databases (like FAISS, Pinecone, or Milvus) for RAG-based AI agents.

-

Maintain CI/CD-driven evolution to keep the stack current and secure.

This approach ensures your application remains competitive, adaptable, and ready to incorporate emerging multimodal AI capabilities — such as generative video, audio synthesis, and embodied AI.

8. Building for Real Impact

Building a full-stack multimodal AI system isn’t just about technology — it’s about creating intelligent, human-centric experiences that deliver measurable value.

When done right, your system becomes a bridge between human creativity and machine intelligence — capable of enhancing decision-making, accelerating workflows, and generating new insights from multimodal data streams.

Whether you’re a startup founder, data scientist, or enterprise engineer, mastering this stack gives you the ability to deploy cutting-edge AI solutions that are not only innovative but also robust, secure, and production-ready.

9. Final Thoughts

The convergence of FastAPI, React, Docker, and NGINX represents the most efficient and scalable pathway for building multimodal AI applications today.

Each component contributes a vital layer backend intelligence, containerized isolation, frontend interactivity, and network reliability resulting in a cohesive system capable of powering next-generation AI services.

If you’re a vibecoder, a curious creator, or an emerging AI developer with limited technical expertise but a strong vision this architectural guide gives you the roadmap to bring your multimodal AI ideas to life.

10. Call to Action

If you want to build an AI-powered full-stack application, whether it’s a multimodal agent, intelligent automation system, or enterprise-grade AI platform contact us for a quote.

I specialize in crafting AI-driven, high-performance, and production-ready applications, built using modern tools like FastAPI, Docker, React, and NGINX, optimized for scalability, security, and future growth.