Introduction

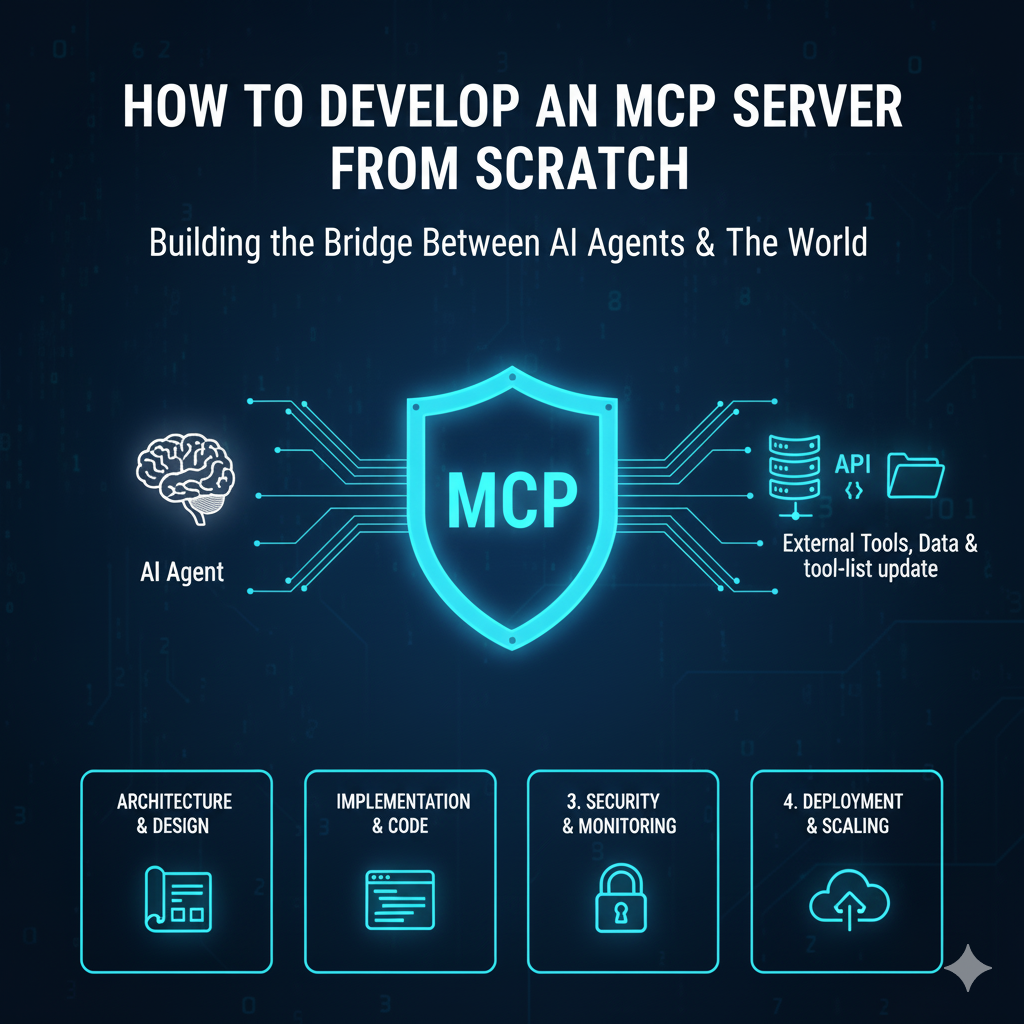

The Model Context Protocol (MCP) is fast becoming a foundational standard in the AI / agent ecosystem. It defines a uniform way for large language models (LLMs) or AI agents to access external tools, resources, and prompts via a well-defined protocol. MCP servers act as bridges between agents and the outside world, exposing context in a secure and structured manner.

If you’re building agentic systems (e.g. chatbot + code execution + data querying), implementing your own MCP server gives you full control over which tools are exposed, how security is enforced, how state is tracked, and how context flows. In this article, we’ll walk you through the full process:

- A quick MCP primer (concepts, transports, primitives)
- Architectural design choices (stateful vs stateless, transport modes, tool structure)
- Step-by-step implementation plan
- Example code sketches
- Security, monitoring, scaling, and maintenance
- Common pitfalls and mitigations
- Next steps

Let’s dive in.

1. MCP Primer: Concepts, Architecture & Spec

Before building, you must understand what MCP servers are meant to do, and how clients/servers interact under the specification.

1.1 What is MCP?

- MCP (Model Context Protocol) is an open protocol that standardizes how AI applications (hosts or clients) connect with external data sources, tools, and contexts.
- Think of MCP as the “USB-C port for AI applications”: instead of hand-coding each model ↔ tool integration, MCP gives a common interface.
- The protocol uses JSON-RPC 2.0 as its messaging layer.
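To make the messaging layer concrete, here is a minimal sketch of JSON-RPC 2.0 request and response envelopes built with Python’s standard library. The helper names (`make_request`, `make_result`, `make_error`) are illustrative assumptions and not part of any MCP SDK; `tools/list` is the method name the MCP specification uses for listing tools.

```python
import json
from typing import Any, Dict, Optional


def make_request(id_: int, method: str, params: Optional[Dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request (hypothetical helper)."""
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "id": id_, "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)


def make_result(id_: int, result: Any) -> str:
    """Serialize a successful JSON-RPC 2.0 response."""
    return json.dumps({"jsonrpc": "2.0", "id": id_, "result": result})


def make_error(id_: Optional[int], code: int, message: str) -> str:
    """Serialize a JSON-RPC 2.0 error response."""
    error = {"code": code, "message": message}
    return json.dumps({"jsonrpc": "2.0", "id": id_, "error": error})


# A client asking for the tool list, and an (empty) server reply.
request = make_request(1, "tools/list")
response = make_result(1, {"tools": []})
```

The `id` ties each response to its request, which is what lets a client keep several calls in flight over one connection.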

1.2 Key Primitives / Components

According to the MCP specification (2025 revision) and server documentation, an MCP server supports three core primitives: tools, resources, and prompts.

| Primitive | Purpose | Example |
|---|---|---|
| Tools | Executable interfaces (functions, API endpoints) that the agent can call. | “search_web(query)”, “run_calculation(expr)”, “send_email(to, body)” |
| Resources | Contextual data or structured content the model can query, e.g. documents, databases, JSON blobs. | A knowledge base, file store, or vector DB |
| Prompts | Prewritten templates or instructions that the client can ask the server to supply, optionally with parameters. | “You are assistant with domain X: get context, etc.” |

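The MCP server must respond to client requests for each primitive. This article refers to them with the shorthand `list_tools()`, `call_tool(tool_name, args)`, `get_resource()`, and `list_prompts()`; on the wire, the specification names the corresponding JSON-RPC methods `tools/list`, `tools/call`, `resources/read`, and `prompts/list`.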

1.3 Transport / Communication Modes

The MCP spec supports several transport types between client and server.

- STDIO / Subprocess: The MCP server runs as a local subprocess; communication is over stdin/stdout pipes. Useful when the server is embedded in the same host as the client.
- HTTP + SSE (Server-Sent Events): The server is reachable over HTTP; the client’s initial request opens an SSE stream that the server uses for streaming or push. This is the older HTTP transport, which newer revisions of the spec replace with Streamable HTTP.
- Streamable HTTP: A more modern transport mode that lets clients receive asynchronous messages (progress, tool results, subscription updates) via HTTP streaming.

Which transport to choose depends on your latency needs, environment, and whether the server will push unsolicited messages (tool updates, notifications).
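As an illustration of the STDIO mode, the sketch below reads one JSON-RPC message per line from an input stream and writes one response per line to an output stream. The function names and the `ping_handler` are assumptions made for this example, and the loop ignores details a real server needs (notifications without an `id`, malformed JSON, batching).

```python
import io
import json
from typing import Any, Callable, Dict, TextIO


def serve_stdio(
    reader: TextIO,
    writer: TextIO,
    handle: Callable[[Dict[str, Any]], Dict[str, Any]],
) -> None:
    """Read newline-delimited JSON-RPC requests and write one response per line."""
    for line in reader:
        line = line.strip()
        if not line:
            continue  # skip blank lines between messages
        request = json.loads(line)
        response = handle(request)
        writer.write(json.dumps(response) + "\n")
        writer.flush()


def ping_handler(request: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical handler that answers every request with an empty result."""
    return {"jsonrpc": "2.0", "id": request.get("id"), "result": {}}


# In a real subprocess you would pass sys.stdin and sys.stdout instead.
fake_in = io.StringIO('{"jsonrpc": "2.0", "id": 1, "method": "ping"}\n')
fake_out = io.StringIO()
serve_stdio(fake_in, fake_out, ping_handler)
```

Because the transport is just a pair of text streams, the same handler can be exercised in tests with in-memory buffers, as done here.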

1.4 Statefulness vs Statelessness

- Stateless server: each request is independent; no persistent session context. Easier to scale horizontally.
- Stateful server: the server tracks session IDs (`mcp-session-id`) or conversational context so that clients can rely on follow-ups or tool chaining over multiple steps. The spec allows both modes.

If you plan multi-step workflows or server-initiated messages, you will likely need a stateful design.

1.5 Security, Authorization & Latest Spec Updates

- MCP servers are now considered OAuth Resource Servers, requiring clients to use scoped access tokens and resource indicators to avoid token misuse.
- You must implement tool-level permissions, rate-limiting, input validation, and session timeouts to prevent abuse.
- Recent safety audits have exposed risks like cross-tool exfiltration, tool poisoning, and malicious server behavior.

Given this foundation, let’s plan your own MCP server.

2. Architectural Design: Planning Your MCP Server

Before writing code, make high-level design decisions:

2.1 Choose Transport & Interaction Mode

- If your server will be co-deployed with the client (e.g. part of a desktop agent), consider STDIO mode.
- For networked agents (web clients, distributed systems), prefer Streamable HTTP or HTTP + SSE.
- If you need streaming tool responses or push notifications (tool list changes), pick Streamable HTTP.

2.2 Choose Session Strategy

- Stateless: simpler, good for many tool calls that are independent
- Stateful: needed if tools have side-effects or rely on multi-step context

If stateful, you’ll need a session store (Redis, in-memory cache, DB) keyed by session ID.

2.3 Define Tool Interfaces and Contracts

List the tools you need to expose. For each tool:

- Name / identifier
- Input schema (types, required vs optional)
- Output schema
- Side-effect policy (does this tool modify state or external systems?)
- Permission level / roles

Also define resource endpoints and prompt templates if needed.
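One way to capture such a contract is a small descriptor object per tool. The sketch below is an assumption about how you might model it: the `ToolSpec` class and its field names are invented for illustration, and the schemas are written as JSON Schema fragments.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, FrozenSet


@dataclass
class ToolSpec:
    """Hypothetical descriptor for one tool's contract."""
    name: str
    input_schema: Dict[str, Any]
    output_schema: Dict[str, Any]
    has_side_effects: bool = False  # True if the tool modifies state or external systems
    allowed_roles: FrozenSet[str] = field(default_factory=frozenset)


send_email = ToolSpec(
    name="send_email",
    input_schema={
        "type": "object",
        "properties": {"to": {"type": "string"}, "body": {"type": "string"}},
        "required": ["to", "body"],
    },
    output_schema={"type": "object", "properties": {"sent": {"type": "boolean"}}},
    has_side_effects=True,
    allowed_roles=frozenset({"admin"}),
)
```

Keeping the side-effect flag and roles next to the schemas means permission checks and approval flows can be driven from the same record the tool list is generated from.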

2.4 Tool Filtering & Capability Management

You’ll likely want the ability to:

- Filter tools per client or session
- Dynamically enable/disable tools
- Version tools over time

2.5 Observability, Logging & Metrics

Include monitoring for:

- `list_tools` calls
- `call_tool` latency, success/fail count
- Session count, memory usage
- Security events, invalid calls
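A minimal sketch of how per-tool call counts, failures, and latency could be collected in process is shown below. The `metrics` dictionary and `timed_call` wrapper are assumptions for illustration; a real deployment would more likely export these values through a metrics library.

```python
import asyncio
import time
from collections import defaultdict
from typing import Any, Awaitable, Callable, Dict

# In-memory counters keyed by tool name (illustrative only).
metrics: Dict[str, Dict[str, float]] = defaultdict(
    lambda: {"calls": 0, "failures": 0, "total_seconds": 0.0}
)


async def timed_call(tool_name: str, fn: Callable[[], Awaitable[Any]]) -> Any:
    """Await fn() while recording call count, failure count, and elapsed time."""
    start = time.perf_counter()
    try:
        return await fn()
    except Exception:
        metrics[tool_name]["failures"] += 1
        raise
    finally:
        metrics[tool_name]["calls"] += 1
        metrics[tool_name]["total_seconds"] += time.perf_counter() - start


async def ok() -> str:
    return "done"


async def boom() -> str:
    raise ValueError("tool failed")


async def demo() -> None:
    await timed_call("echo", ok)
    try:
        await timed_call("echo", boom)
    except ValueError:
        pass  # the failure is already counted


asyncio.run(demo())
```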

2.6 Scalability & Deployment

- If stateless, you can horizontally scale multiple server instances behind load balancers.
- For stateful servers, shard sessions or use sticky sessions / a shared session store.
- Consider health checks, circuit breakers for tool failures, and retry logic.

3. Step-by-Step Implementation Plan

Here’s an ordered plan to build your MCP server from scratch.

3.1 Initialize Project & Dependencies

Pick your language/framework (Python / Node.js / Go / TypeScript). For this example, we’ll assume Python + FastAPI + async approach.

Stack suggestion:

- FastAPI (or Starlette) for HTTP
- `uvicorn` server
- JSON-RPC 2.0 handling (use `jsonrpcserver` or custom)
- Async patterns for streaming
- Optional session store (Redis)

3.2 Define MCP Message Schema & RPC Handlers

You need RPC methods like:

- `initialize(params…)`
- `list_tools()`
- `call_tool(tool_name, params)`
- `get_resource(name, params)`
- `list_prompts()`
- `get_prompt(name, params)`
- `subscribe_notifications()` (if supporting push)

You can reuse the message definitions from the MCP spec or base your own on them.
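A common way to wire such methods together is a dispatch table that maps method names to handler functions. The sketch below assumes the shorthand method names used in this article; the `rpc_method` decorator, the handlers, and the `demo-server` payload are invented for illustration.

```python
from typing import Any, Callable, Dict

Handler = Callable[[Dict[str, Any]], Any]
handlers: Dict[str, Handler] = {}


def rpc_method(name: str) -> Callable[[Handler], Handler]:
    """Register a handler under a JSON-RPC method name."""
    def decorator(fn: Handler) -> Handler:
        handlers[name] = fn
        return fn
    return decorator


@rpc_method("initialize")
def initialize(params: Dict[str, Any]) -> Dict[str, Any]:
    # Hypothetical server info payload.
    return {"serverInfo": {"name": "demo-server", "version": "0.1.0"}}


@rpc_method("list_prompts")
def list_prompts(params: Dict[str, Any]) -> Dict[str, Any]:
    return {"prompts": []}


def dispatch(message: Dict[str, Any]) -> Dict[str, Any]:
    """Route one JSON-RPC request to its handler, or return a -32601 error."""
    id_ = message.get("id")
    handler = handlers.get(message.get("method"))
    if handler is None:
        error = {"code": -32601, "message": "Method not found"}
        return {"jsonrpc": "2.0", "id": id_, "error": error}
    return {"jsonrpc": "2.0", "id": id_, "result": handler(message.get("params", {}))}
```

Adding a new RPC method then only requires a new decorated function; the endpoint code does not change.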

3.3 Implement Tool Registry & Execution Engine

- Create a registry that maps tool names to Python callables (or async functions).
- Implement code to validate input schema and convert JSON params to typed arguments.
- When `call_tool` is invoked, dispatch to the registered function, capture results or exceptions, and return structured JSON.
- Optionally wrap tool calls in sandboxes or timeouts for security.
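The validation and timeout steps can be sketched with the standard library alone. Here `validate_params` is a deliberately simple stand-in for real schema validation, and `run_tool`, `add`, and `slow` are hypothetical names used only for this example.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict, List


def validate_params(params: Dict[str, Any], required: List[str], types: Dict[str, type]) -> None:
    """Check required keys and simple Python types; raise ValueError on mismatch."""
    for key in required:
        if key not in params:
            raise ValueError(f"missing required parameter: {key}")
    for key, expected in types.items():
        if key in params and not isinstance(params[key], expected):
            raise ValueError(f"parameter {key!r} must be {expected.__name__}")


async def run_tool(
    fn: Callable[[Dict[str, Any]], Awaitable[Any]],
    params: Dict[str, Any],
    timeout_s: float = 5.0,
) -> Dict[str, Any]:
    """Run a tool with a timeout and return a structured result or error."""
    try:
        result = await asyncio.wait_for(fn(params), timeout=timeout_s)
        return {"ok": True, "result": result}
    except asyncio.TimeoutError:
        return {"ok": False, "error": "tool timed out"}
    except Exception as exc:  # report tool failures instead of crashing the server
        return {"ok": False, "error": str(exc)}


async def add(params: Dict[str, Any]) -> int:
    validate_params(params, required=["a", "b"], types={"a": int, "b": int})
    return params["a"] + params["b"]


async def slow(params: Dict[str, Any]) -> str:
    await asyncio.sleep(1)
    return "never returned in time"


good = asyncio.run(run_tool(add, {"a": 2, "b": 3}))
bad = asyncio.run(run_tool(add, {"a": 2}))
late = asyncio.run(run_tool(slow, {}, timeout_s=0.01))
```

Returning a structured error rather than raising keeps one misbehaving tool from taking down the request handler.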

3.4 Resource & Prompt APIs

- `get_resource` returns structured content (e.g. JSON documents).
- `list_prompts` / `get_prompt` return predefined instruction templates.

You can store these in JSON files, DB, or in code depending on complexity.
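For the in-code option, parameterized prompts can be as simple as a dictionary of templates. The sketch below uses `string.Template` from the standard library; the prompt name and wording are made up for illustration.

```python
from string import Template
from typing import Dict, List

# Hypothetical in-code prompt store: prompt name -> parameterized template.
PROMPTS: Dict[str, Template] = {
    "domain_assistant": Template(
        "You are an assistant for $domain. Answer using the provided context: $context"
    ),
}


def list_prompts() -> List[str]:
    """Return the names of all available prompts."""
    return sorted(PROMPTS)


def get_prompt(name: str, params: Dict[str, str]) -> str:
    """Fill in a template; raises KeyError for unknown prompts or missing parameters."""
    if name not in PROMPTS:
        raise KeyError(f"unknown prompt: {name}")
    return PROMPTS[name].substitute(params)


text = get_prompt("domain_assistant", {"domain": "billing", "context": "invoice #42"})
```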

3.5 Streaming / Long-Running Tool Support

If a tool takes a long time (e.g. web crawling, large model inference), use streaming:

- For request-scoped streams, open a streaming HTTP response and push partial results as they come.
- The client should support streaming updates for `call_tool` (progress, interim results).

Make sure the framing of the stream matches what the client expects (for example, partial JSON chunks).
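One possible shape for a streamed tool is an async generator that yields one Server-Sent Events frame per progress update. The `crawl_with_progress` tool, its payload fields, and the example URLs below are assumptions for illustration; the actual work is replaced by a placeholder.

```python
import asyncio
import json
from typing import AsyncIterator, List


async def crawl_with_progress(urls: List[str]) -> AsyncIterator[str]:
    """Yield one SSE-formatted frame per progress update, then a final result frame."""
    for done, url in enumerate(urls, start=1):
        await asyncio.sleep(0)  # placeholder for real work such as fetching the URL
        update = {"progress": done, "total": len(urls), "url": url}
        yield f"data: {json.dumps(update)}\n\n"
    final = {"result": "crawl complete"}
    yield f"data: {json.dumps(final)}\n\n"


async def collect() -> List[str]:
    """Drain the stream into a list, as a client would consume it frame by frame."""
    urls = ["https://example.com/a", "https://example.com/b"]
    return [frame async for frame in crawl_with_progress(urls)]


frames = asyncio.run(collect())
```

In FastAPI, a generator like this could be wrapped in a `StreamingResponse` with `media_type="text/event-stream"` so that frames reach the client as they are produced.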

3.6 Session & State Handling (if stateful)

- Maintain a session context (in memory or Redis) keyed by `mcp-session-id`.
- Store context, intermediate tool outputs, prompts, etc.
- Tie repeated tool calls together as part of a session.
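A minimal in-memory version of such a session store might look like the sketch below. The `SessionStore` class and its sliding-expiry policy are assumptions for illustration; a multi-instance deployment would back this with Redis or a database instead.

```python
import time
import uuid
from typing import Any, Dict, Optional


class SessionStore:
    """In-memory session store with a sliding time-to-live (illustrative only)."""

    def __init__(self, ttl_seconds: float = 1800.0) -> None:
        self._ttl = ttl_seconds
        self._sessions: Dict[str, Dict[str, Any]] = {}

    def create(self) -> str:
        """Create a session and return its ID (to be sent back as mcp-session-id)."""
        session_id = uuid.uuid4().hex
        self._sessions[session_id] = {"expires_at": time.monotonic() + self._ttl, "data": {}}
        return session_id

    def get(self, session_id: str) -> Optional[Dict[str, Any]]:
        """Return the session's data dict, or None if unknown or expired."""
        entry = self._sessions.get(session_id)
        if entry is None:
            return None
        if entry["expires_at"] < time.monotonic():
            del self._sessions[session_id]
            return None
        entry["expires_at"] = time.monotonic() + self._ttl  # sliding expiry
        return entry["data"]


store = SessionStore(ttl_seconds=60)
sid = store.create()
store.get(sid)["last_tool_output"] = {"result": 5}
```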

3.7 Authorization & Security

- Require the client to present an access token (Bearer token or JWT) on each request.
- Validate the token, its scope, and its resource indicator (the new spec requires resource indicators to avoid token misuse) (Auth0).
- On tool registration, define per-tool permissions (who can call which tool) and enforce them at call time.
- Sanitize input to avoid injection or path traversal.
- Time out or cancel long-running function calls.
- Log malicious or invalid requests.
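Two of these checks can be sketched without any third-party library. The example assumes the token signature has already been verified elsewhere and only inspects the decoded claims; the resource identifier `https://mcp.example.com` and the scope name `tools:call` are hypothetical.

```python
from pathlib import Path
from typing import Any, Dict

SERVER_RESOURCE = "https://mcp.example.com"  # hypothetical identifier for this server


def authorize(claims: Dict[str, Any], required_scope: str) -> bool:
    """Check audience and scope on already-verified token claims."""
    audience = claims.get("aud")
    audiences = audience if isinstance(audience, list) else [audience]
    scopes = str(claims.get("scope", "")).split()
    return SERVER_RESOURCE in audiences and required_scope in scopes


def safe_resolve(base_dir: Path, user_path: str) -> Path:
    """Resolve a user-supplied path and reject anything outside base_dir."""
    base = base_dir.resolve()
    candidate = (base / user_path).resolve()
    if candidate != base and base not in candidate.parents:
        raise PermissionError(f"path escapes base directory: {user_path}")
    return candidate
```

Checking the audience is what prevents a token issued for another server from being replayed against this one, which is the token-misuse problem resource indicators are meant to address.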

3.8 Tool Filtering / Dynamic Capabilities

You may allow clients to see only a subset of tools:

- Per-session tool filter (based on client identity)
- Dynamic enable/disable flags
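Both mechanisms can be combined in a single filter applied when a client lists tools. In this sketch the `TOOLS` table, the role names, and the `visible_tools` function are assumptions for illustration.

```python
from typing import Any, Dict, List, Set

# Hypothetical registry: tool name -> roles allowed to see it, plus an enabled flag.
TOOLS: Dict[str, Dict[str, Any]] = {
    "search_docs": {"roles": {"reader", "admin"}, "enabled": True},
    "update_record": {"roles": {"admin"}, "enabled": True},
    "send_email": {"roles": {"admin"}, "enabled": False},
}


def visible_tools(client_roles: Set[str]) -> List[str]:
    """Return enabled tools that share at least one role with the client."""
    return sorted(
        name
        for name, meta in TOOLS.items()
        if meta["enabled"] and client_roles & meta["roles"]
    )
```

The same check should also run when a tool is called, since hiding a tool from the list does not by itself stop a client from invoking it by name.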

3.9 Testing & Validation

- Unit test each RPC method, including edge cases, erroneous input, and exception handling.
- Use a reference MCP client (SDK) to connect, list tools, make calls, simulate streaming.
- Test concurrent sessions, tool invocation under load.

3.10 Deploy & Monitor

- Dockerize your server.
- Use a load balancer or API gateway.
- Add health/liveness endpoints.
- Capture metrics (Prometheus / OpenTelemetry).
- Plan for rolling restarts and, if needed, session persistence.

4. Sample Code Sketch (Python / FastAPI)

Below is a simplified code sketch. It is not production-grade, but it is enough to illustrate the architecture.

```python
# mcp_server.py
import asyncio
from typing import Any, Awaitable, Callable, Dict

from fastapi import FastAPI, Request

app = FastAPI()

# A simple registry mapping tool names to async callables
ToolFn = Callable[[Dict[str, Any]], Awaitable[Any]]
tool_registry: Dict[str, ToolFn] = {}


def register_tool(name: str):
    """Decorator that registers an async function under a tool name."""
    def decorator(fn: ToolFn) -> ToolFn:
        tool_registry[name] = fn
        return fn
    return decorator


@register_tool("echo")
async def tool_echo(params: Dict[str, Any]):
    message = params.get("message", "")
    await asyncio.sleep(0.1)  # simulate a small amount of async work
    return {"echo": message}


@register_tool("add")
async def tool_add(params: Dict[str, Any]):
    a = params.get("a", 0)
    b = params.get("b", 0)
    return {"result": a + b}


def rpc_error(id_: Any, code: int, message: str) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 error response instead of raising an HTTP error."""
    return {"jsonrpc": "2.0", "id": id_, "error": {"code": code, "message": message}}


async def handle_call_tool(tool_name: str, params: Dict[str, Any]):
    if tool_name not in tool_registry:
        raise KeyError(tool_name)
    fn = tool_registry[tool_name]
    return await fn(params)


@app.post("/rpc")
async def rpc_endpoint(req: Request):
    payload = await req.json()
    method = payload.get("method")
    params = payload.get("params", {})
    id_ = payload.get("id")

    # Simplified method names; on the wire the MCP spec uses
    # "tools/list" and "tools/call".
    if method == "list_tools":
        result = list(tool_registry.keys())
    elif method == "call_tool":
        tn = params.get("tool_name")
        tparams = params.get("args", {})
        try:
            result = await handle_call_tool(tn, tparams)
        except KeyError:
            return rpc_error(id_, -32602, f"Tool not found: {tn}")
    else:
        return rpc_error(id_, -32601, "Unsupported method")

    return {"jsonrpc": "2.0", "id": id_, "result": result}
```

This minimal server supports list_tools and call_tool. To extend:

- Add streaming support
- Add resource & prompt endpoints
- Add authorization middleware
- Add session handling
- Add tool filtering

5. Security, Risks & Pitfalls

Building MCP servers introduces unique risks. Based on academic audits and security research:

5.1 Known Vulnerabilities

- Tool Poisoning / Tool Misuse: A malicious client or compromised session can invoke unintended tools or chain tools to exfiltrate data.
- Cross-Server Attacks: In ecosystems where multiple MCP servers are connected, a “Trojan” server might exploit trust relationships and leak data across servers.
- Malicious Server Behavior: A compromised server might deliver malicious prompts or perform side effects secretly.
- Token Mis-redemption: Without using resource indicators, access tokens might be misused across servers. The spec now requires resource indicators to scope tokens.
- Session Hijacking / Identity Fragmentation: Fragmented or poorly managed identities across systems can lead to privilege escalation.

5.2 Best Practices & Mitigations

- Use cryptographically safe token systems and require resource indicators
- Validate and sanitize all inputs
- Enforce least privilege: each client/session gets only required tools
- Rate-limit and throttle calls
- Use timeout / cancellation for tool calls
- Sandbox or isolate tool execution (docker, VM, restricted environment)
- Monitor logs and run anomaly detection
- Use security audits (e.g. MCPSafetyScanner) to detect vulnerabilities in your server implementation
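Rate limiting is one of the easier mitigations to prototype. The sketch below is a simple token-bucket limiter; the `TokenBucket` class is an assumption for illustration, and the injectable `clock` parameter exists only to make the behaviour easy to test.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts up to `capacity` calls, refilled at `rate_per_s` tokens per second."""

    def __init__(
        self,
        rate_per_s: float,
        capacity: int,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._rate = rate_per_s
        self._capacity = capacity
        self._tokens = float(capacity)
        self._clock = clock
        self._last = clock()

    def allow(self) -> bool:
        """Consume one token if available; return False when the caller should be throttled."""
        now = self._clock()
        elapsed = now - self._last
        self._tokens = min(self._capacity, self._tokens + elapsed * self._rate)
        self._last = now
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False
```

In practice you would keep one bucket per client or session and reject a `call_tool` request with an error response when `allow()` returns `False`.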

6. Scaling, Operational Strategies & Maintenance

6.1 Horizontal Scaling

If your server is stateless (no session memory), you can scale multiple instances. Use a load balancer to distribute RPC calls.

6.2 Stateful Session Sharding

For stateful behavior, either:

- Use a shared session store (Redis, database)
- Shard sessions by client/user
- Ensure sticky routing or session affinity

6.3 Failover & Resilience

- Time out tool execution and define fallback strategies
- Shut down gracefully: stop accepting new requests and drain in-flight ones
- Use circuit breakers if a downstream tool fails
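A circuit breaker can be sketched in a few lines. This version is a simplification made for illustration: the class names are invented, it wraps synchronous callables only, and after the cool-down it resets the failure count fully rather than modelling a separate half-open state.

```python
import time
from typing import Any, Callable, Optional


class CircuitOpenError(RuntimeError):
    """Raised when calls are rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(
        self,
        max_failures: int = 3,
        reset_after_s: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._max_failures = max_failures
        self._reset_after_s = reset_after_s
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, fn: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_after_s:
                raise CircuitOpenError("downstream tool temporarily disabled")
            self._opened_at = None  # cool-down elapsed: allow calls again
            self._failures = 0
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._failures >= self._max_failures:
                self._opened_at = self._clock()  # open the circuit
            raise
        self._failures = 0  # any success resets the failure streak
        return result
```

While the circuit is open, callers fail fast instead of piling up requests against a tool that is already struggling.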

6.4 Versioning & Tool Evolution

- Maintain backward compatibility for tool APIs
- Version your tool schemas
- Support dynamic registration/unregistration of tools

6.5 Observability & Telemetry

Expose metrics (Prometheus) for:

- RPC method latencies
- Tool call durations/failures
- Session count
- Authorization event counts

Also enable structured logs for incident tracing.

6.6 Maintenance & Upgrades

- Update tool implementations (hot reload)
- Migrate schemas carefully
- Monitor dependency updates for security

7. Use Cases & Example Scenarios

Here are a few agent workflows where your MCP server shines:

- Agent with document search + QA + action tools
  - MCP server provides tools: `search_docs`, `open_doc_section`, `summarize_section`, `update_record`
  - Client (agent) lists tools, then picks which to call
- Multi-agent orchestration
  - One MCP server per domain (e.g. CRM, Billing, Analytics)
  - Agent queries across servers
- Prompt-driven dynamic tool selection
  - The client requests `list_prompts()` or `get_prompt()` to adjust agent behavior
- Tool updates / new features rollout
  - Servers push updated tool lists or deprecations via push mode

These use cases demonstrate the flexibility of your MCP server as the “brain interface” for agent systems.

8. Next Steps & Advanced Enhancements

Once your base MCP server is working, you can extend:

- Approval flows: client must request permission before calling sensitive tools
- Subscription / notifications: push server messages to client (tool list changes, updates)
- Caching & local agent proxies: reduce latency on repeated `list_tools()` calls
- Connector chaining or “bridge servers” like MCP Bridge (a proxy architecture) that unify multiple MCP servers behind one façade (arXiv)
- Graph orchestration layers (LangGraph integration) so agents plan tool sequences
- Access control UI / dashboards to manage which clients get which tools

9. Conclusion

Developing an MCP Server from scratch is a powerful way to enable agentic AI systems that access external tools and data in a structured, secure way. You now have a blueprint:

- Understand MCP concepts, primitives, transports
- Design architectural trade-offs (state vs stateless, streaming, tool filtering)
- Implement message dispatch, tool registry, security, session handling
- Add monitoring, scaling, resilience
- Mitigate known security risks (tool poisoning, cross-server attacks, token misuse)

If you’re building an AI agent or platform and want a robust MCP server backend (for FastAPI, Django, or your custom ecosystem), reach out to us for a quote or consultation. We can architect, build, secure, and scale your MCP infrastructure end-to-end.