Beyond the Frame: How AI Orbit Labs is Redefining Storytelling with Image-to-Video Technology

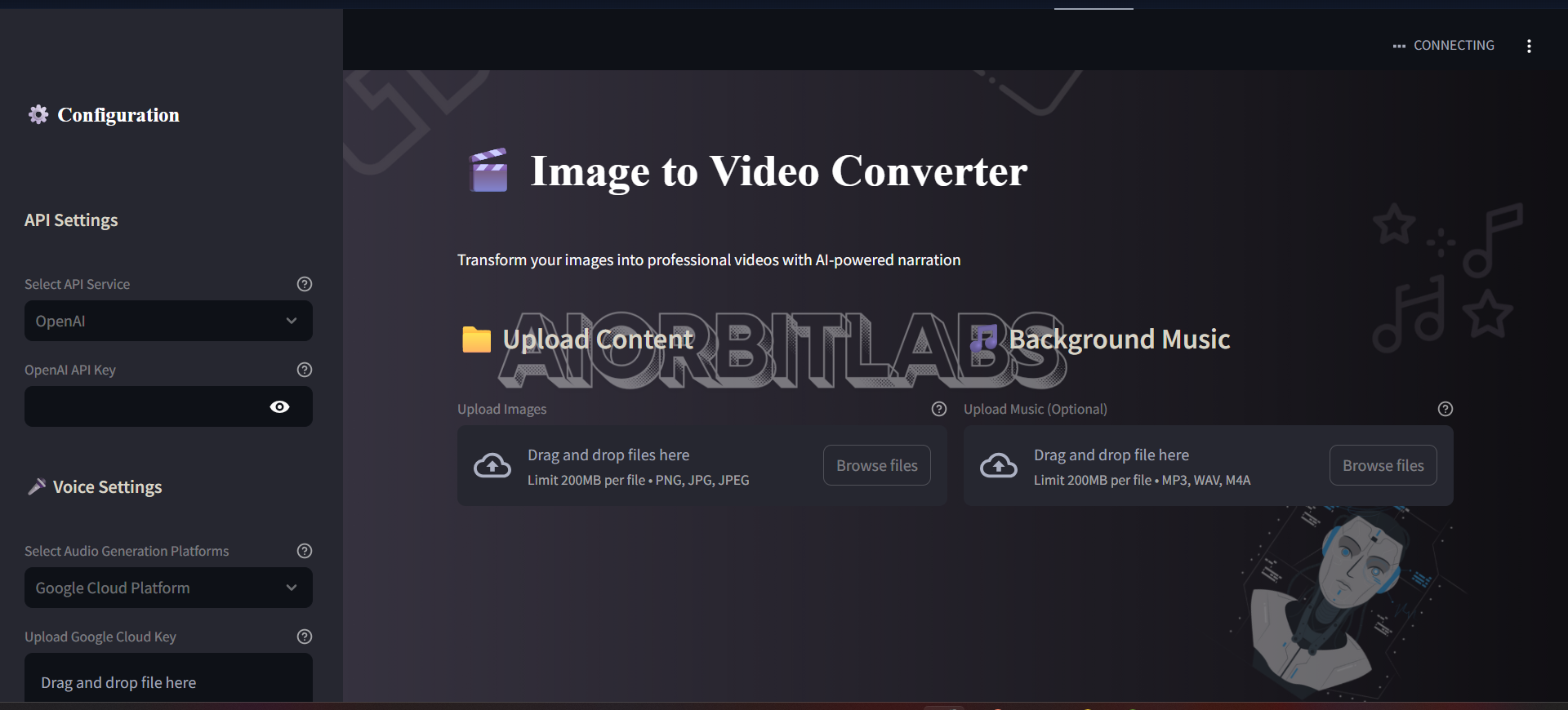

In the fast-evolving world of artificial intelligence, the line between static visuals and dynamic media is disappearing. At AI Orbit Labs, we have always been at the forefront of this shift, exploring how cutting-edge machine learning can breathe life into still images. Our latest project, Image to Video, is not just a tool; it is a gateway to the next era of digital engagement—and it is now live on Hugging Face Spaces.

As we move deeper into 2026, the demand for "intelligent content" has skyrocketed. Passive observation is being replaced by immersive experiences. This comprehensive guide explores why image-to-video technology is essential, the sophisticated tech stack powering our pipeline, and how you can leverage these innovations for marketing, education, and beyond.

1. The Paradigm Shift: Why Image-to-Video Matters in 2026

Static images, while powerful, have inherent limitations in a high-speed digital economy. To capture attention in a world of short-form video and algorithmic feeds, creators must think beyond the single frame.

The Power of Dynamic Engagement

In today’s digital era, audiences demand content that moves. A widely circulated marketing statistic holds that video content generates up to 1,200% more shares than text and images combined. By converting a static brand visual into a fluid video, businesses can:

- Increase Dwell Time: Moving visuals hold the viewer's eye longer, signaling quality to search engine algorithms.
- Enhance Emotional Resonance: Movement allows for better pacing, transitions, and the building of a narrative arc that a still photo simply cannot achieve.

Democratizing Professional Production

Traditionally, high-quality video required expensive cameras, lighting kits, and professional editing suites. AI Orbit Labs is dismantling these barriers. Our Image to Video project provides:

- Accessibility: Small-scale creators can now build professional-grade videos without a Hollywood budget.
- Scalability: For enterprise businesses, the ability to produce hundreds of video assets from a single library of product images is a game-changer for localized marketing and A/B testing.

2. Deep Dive: The Tech Stack Behind the Innovation

The Image to Video pipeline at AI Orbit Labs combines several dependencies, each responsible for one stage: text extraction, video synthesis, narration, and the user interface. We didn't just build a wrapper; we integrated a multi-layered system designed for accuracy and performance.

Text Extraction: PaddleOCR & PaddlePaddle

To make a video truly "intelligent," the system must understand what is inside the image. We utilize PaddleOCR (powered by PaddlePaddle) for industry-leading text extraction.

- Precision: PaddleOCR handles complex layouts, multi-column text, and even handwritten notes with high fidelity.
- Contextual Awareness: By extracting text, our pipeline can automatically generate relevant overlays or inform the AI voiceover about the image's specific content.
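To make the "contextual awareness" step concrete, here is a minimal sketch of how extracted text could be turned into a narration script. It is an illustration, not our production code: the `OcrLine` shape, the `build_narration` helper, and both thresholds are hypothetical, and the PaddleOCR call that would produce these lines is deliberately left out. The idea is simply to drop low-confidence detections and read the rest top to bottom, left to right.

```python
from dataclasses import dataclass


@dataclass
class OcrLine:
    """One recognized line of text (hypothetical shape, not PaddleOCR's own type)."""
    text: str
    confidence: float  # recognition score in [0, 1]
    top: float         # y coordinate of the line's top edge, in pixels
    left: float        # x coordinate of the line's left edge, in pixels


def build_narration(lines, min_confidence=0.8, row_tolerance=10.0):
    """Drop low-confidence lines, sort in reading order, and join into one script."""
    kept = [ln for ln in lines if ln.confidence >= min_confidence and ln.text.strip()]
    # Bucket by row so lines at nearly the same height read left to right.
    kept.sort(key=lambda ln: (round(ln.top / row_tolerance), ln.left))
    return " ".join(ln.text.strip() for ln in kept)


sample = [
    OcrLine("Sale ends Friday", 0.95, top=120, left=40),
    OcrLine("50% OFF", 0.99, top=20, left=200),
    OcrLine("Big", 0.97, top=22, left=30),
    OcrLine("smudge", 0.31, top=60, left=10),  # filtered out: low confidence
]
print(build_narration(sample))  # Big 50% OFF Sale ends Friday
```

The same filtered list could feed text overlays as well as the voiceover, so both stay consistent with what is actually visible in the image.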

Video Synthesis: OpenCV & MoviePy

The "movement" in our videos is handled by a combination of OpenCV and MoviePy.

- OpenCV provides the heavy lifting for image processing and frame-by-frame manipulation.
- MoviePy acts as our digital editor, stitching sequences together, managing transitions, and syncing audio tracks to the visual timeline.
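One common way to create movement from a single still is a slow, centered zoom (often called a Ken Burns effect). The sketch below shows only the geometry, in plain Python, and is an assumption about how such an effect can be built rather than a description of our exact pipeline. The `zoom_crop_boxes` function and its parameters are hypothetical. In practice each crop box would be cut from the image array, scaled back to full size with OpenCV's `cv2.resize`, and the resulting frames handed to MoviePy (for example via `ImageSequenceClip`) for assembly.

```python
def zoom_crop_boxes(width, height, n_frames, end_zoom=1.25):
    """Return one (x, y, w, h) crop box per frame for a centered zoom-in.

    Frame 0 shows the whole image; the last frame shows the central
    1/end_zoom portion. Each crop would then be resized back to
    (width, height) before being written out as a video frame.
    """
    if n_frames < 1 or end_zoom < 1.0:
        raise ValueError("need n_frames >= 1 and end_zoom >= 1.0")
    boxes = []
    for i in range(n_frames):
        # Linear progress from 0.0 (first frame) to 1.0 (last frame).
        t = i / (n_frames - 1) if n_frames > 1 else 0.0
        zoom = 1.0 + (end_zoom - 1.0) * t
        w = round(width / zoom)
        h = round(height / zoom)
        # Center the crop inside the original image.
        x = (width - w) // 2
        y = (height - h) // 2
        boxes.append((x, y, w, h))
    return boxes


print(zoom_crop_boxes(1920, 1080, 3, end_zoom=2.0))
# [(0, 0, 1920, 1080), (320, 180, 1280, 720), (480, 270, 960, 540)]
```

A pan works the same way with a fixed crop size and a moving origin, so one helper of this kind can cover several camera moves.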

AI Narrations: Google Cloud TTS & ElevenLabs

A video is only as good as its sound. To ensure professional narration, we’ve integrated:

- Google Cloud Text-to-Speech (TTS): For reliable, high-speed, and multilingual voice generation.
- ElevenLabs: For those looking for high-emotion, "human-like" voice clones that add a layer of authenticity to storytelling.
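Whichever voice provider is used, hosted TTS services generally cap how much text a single request may contain, so long scripts need to be split before synthesis. The following is a minimal sketch of sentence-aware chunking. The `chunk_script` helper is hypothetical and the `max_chars` default is a placeholder, not a documented limit of Google Cloud TTS or ElevenLabs; check your provider's current quota before choosing a value.

```python
import re


def chunk_script(script, max_chars=500):
    """Split a narration script into chunks of at most max_chars characters,
    breaking at sentence boundaries where possible."""
    sentences = re.split(r"(?<=[.!?])\s+", script.strip())
    chunks, current = [], ""
    for sentence in sentences:
        if not sentence:
            continue
        candidate = f"{current} {sentence}".strip()
        if len(candidate) <= max_chars:
            current = candidate
        else:
            if current:
                chunks.append(current)
            # A single sentence longer than the limit is hard-split.
            while len(sentence) > max_chars:
                chunks.append(sentence[:max_chars])
                sentence = sentence[max_chars:]
            current = sentence
    if current:
        chunks.append(current)
    return chunks


print(chunk_script("One two. Three four! Five six? Seven.", max_chars=20))
# ['One two. Three four!', 'Five six? Seven.']
```

Each chunk can then be sent as its own synthesis request, and the returned audio segments concatenated in order on the video timeline.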

User Interface: Streamlit

We believe that advanced AI should be easy to use. Streamlit allowed us to build an interactive, browser-based interface that hides the complexity of the backend code, letting users focus on creativity rather than terminal commands.

3. Real-World Use Cases: Where AI Video Shines

The applications for image-to-video AI are limited only by the imagination. At AI Orbit Labs, we see four key sectors where this technology is becoming indispensable:

A. Digital Marketing & E-Commerce

Convert your standard product shots into high-converting social media ads. Instead of a flat photo of a product, imagine a 10-second clip where the product glimmers under shifting light, accompanied by an AI-generated voiceover highlighting its key features.

B. E-Learning & Corporate Training

Animate educational material for better comprehension. According to 2026 e-learning trends, interactive video increases knowledge retention by 25% compared to text-based modules. Educators can now turn infographics into narrated "explainer" videos in seconds.

C. Creative Storytelling & Personal Archiving

Bring photo albums or digital illustrations to life. For authors and artists, this tool can create "book trailers" or animated concept art that helps pitch ideas to publishers and studios.

D. Accessibility & Inclusion

One of the applications we are proudest of is using AI to create narrated video versions of complex infographics. For users with visual impairments, a narrated video that describes and explains an image provides a much more inclusive experience than simple alt-text.

4. Why We Chose Hugging Face Spaces

Deployment is just as important as development. By hosting our Image to Video project on Hugging Face Spaces, we ensure several key benefits for our community:

- Frictionless Experience: No complex Python environments or GPU setups are required. Users just click and use.
- Community-Driven Innovation: Hugging Face is the heart of the global AI community. Having our tool live there allows for instant feedback from other developers, which fuels our iterative improvement process.
- Foundation for Growth: Spaces provides a scalable environment that allows us to test new models (like Sora or Kling integrations) before migrating them to larger cloud architectures.

5. The Roadmap Ahead: What’s Next for AI Orbit Labs?

Our Image to Video project is just the first chapter. We are currently working on integrating this tool into our broader AI ecosystem. Planned additions include:

- Real-Time Customization: Adding a "Director's Mode" where users can choose specific transitions and cinematic camera movements (dolly, zoom, pan).
- Multilingual Expansion: Leveraging our work with multilingual AI voice agents to offer instant translation and dubbing for every video generated.
- SmartOps & RAG Integration: Integrating with systems like our ILMA University Chatbot to allow students to "ask" for a video summary of a lecture slide.
- AI SEO Optimization: Automatically generating keyword-rich video descriptions and metadata to help your generated content rank faster on YouTube and Google.

Final Thoughts: The Future is Intelligent

The future of storytelling is no longer static—it is intelligent, adaptive, and powered by AI. By transforming still images into engaging videos, we are not just changing a file format; we are changing how humans communicate and consume information.

At AI Orbit Labs, we remain committed to making these powerful tools practical for real-world applications. Whether you are interested in LLM optimization, security automation, or the financial side of AI through projects like AI Cash Orbit, we invite you to join our journey.