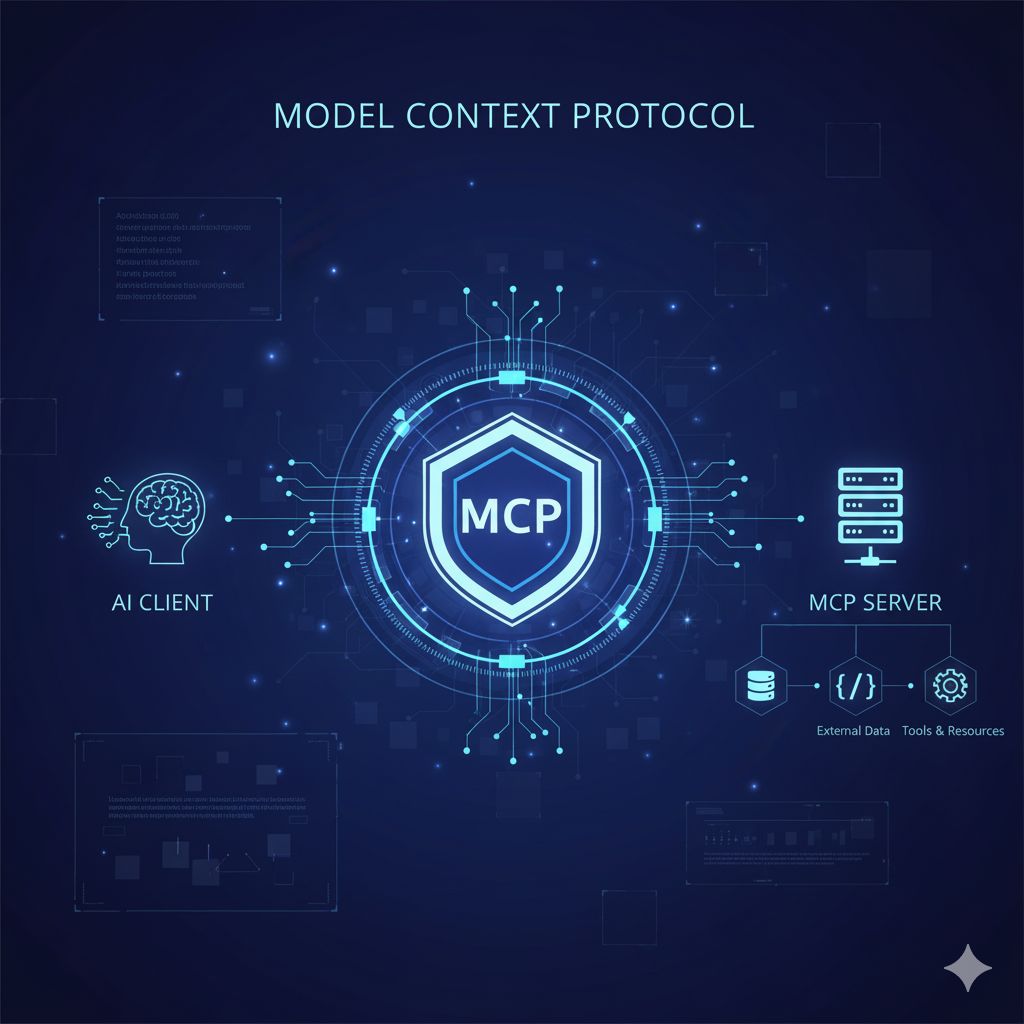

1. What Is MCP (Model Context Protocol)?

- The Model Context Protocol (MCP), introduced by Anthropic in November 2024, is an open standard for connecting LLMs (or AI agents) to external tools, data sources, and environments in a consistent, standardized way.
- It aims to replace the proliferation of bespoke integrations between models and APIs by providing a unified, RPC-style interface (built on JSON-RPC 2.0) for tool invocation, data access, prompt templates, subscriptions, and more.
- In short: an MCP client (running inside the AI application or agent) can talk to one or more MCP servers, which offer capabilities (tools, resources, prompts) that the model can call dynamically.

Because MCP is open and protocol-based, different organizations can host MCP servers for their own domains (e.g., internal tools, data, services) and the model can choose which server to call depending on context.
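To make the "RPC-style" point concrete: MCP messages are JSON-RPC 2.0 objects. The sketch below builds a tools/list request and a tools/call request by hand. The tool name get_weather and its arguments are hypothetical, and in practice an MCP SDK constructs these messages for you.

```python
import json
from itertools import count
from typing import Optional

# Monotonic request ids; JSON-RPC pairs each response with its request by id.
_next_id = count(1)


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request; the server must answer it with the same id."""
    msg = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def make_notification(method: str, params: Optional[dict] = None) -> dict:
    """Notifications carry no id, so the receiver never sends a response."""
    msg = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# Discover the server's tools, then invoke one (tool name and arguments are hypothetical).
list_req = make_request("tools/list")
call_req = make_request(
    "tools/call",
    {"name": "get_weather", "arguments": {"city": "Brussels"}},
)
print(json.dumps(call_req))
```

The request/notification split matters in practice: a client expects exactly one response per id, while notifications such as notifications/initialized are fire-and-forget.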

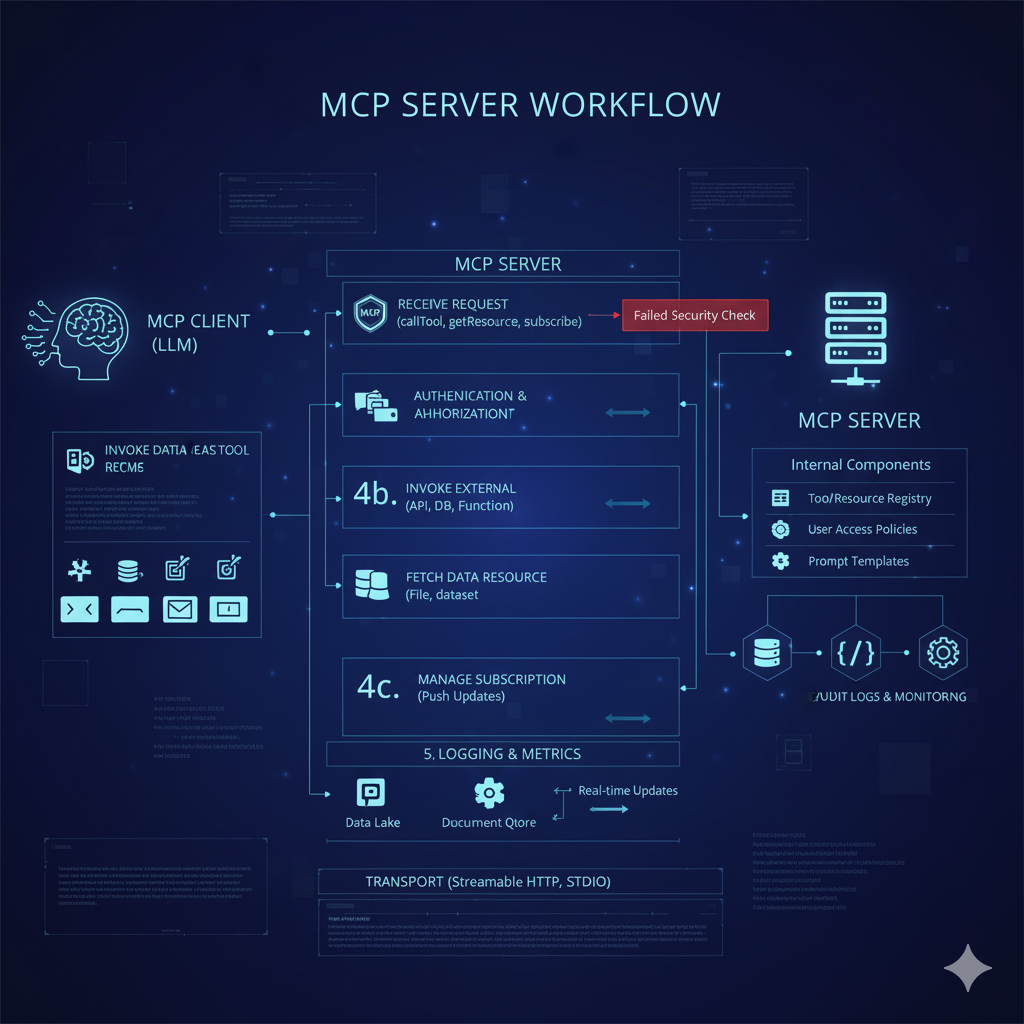

2. The Role of an MCP Server

An MCP Server is the backend component in the MCP ecosystem. It:

- Exposes tools and resources (APIs, data, functions) under the MCP protocol
- Handles requests from MCP clients (acting on behalf of LLMs) to invoke those tools
- Performs routing, security checks, authorization, and state tracking (if stateful)
- Can push updates/notifications to clients (depending on transport)
- May manage user settings, tool lists, prompt templates, etc.

In effect, the MCP server is a gateway that gives the AI model controlled, auditable access to external capabilities, without embedding custom logic into the LLM itself.
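A toy version of that gateway role fits in a few lines. The standard-library sketch below registers a hypothetical add tool and routes tools/list and tools/call requests to it. It deliberately skips the initialize handshake, the transport, and authorization, which a real server (or an MCP SDK) would handle.

```python
from typing import Any, Callable, Dict

# Hypothetical registry mapping tool names to plain Python functions.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function as a tool under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def add(a: int, b: int) -> int:
    """Add two integers."""
    return a + b


def reply(rid: Any, result: dict) -> dict:
    return {"jsonrpc": "2.0", "id": rid, "result": result}


def error(rid: Any, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": rid, "error": {"code": code, "message": message}}


def handle(request: dict) -> dict:
    """Route one JSON-RPC request to the matching MCP method."""
    rid, method = request.get("id"), request.get("method")
    if method == "tools/list":
        # Simplified: a real server would publish a full JSON Schema per tool.
        tools = [
            {"name": name, "description": fn.__doc__ or "", "inputSchema": {"type": "object"}}
            for name, fn in TOOLS.items()
        ]
        return reply(rid, {"tools": tools})
    if method == "tools/call":
        params = request.get("params", {})
        fn = TOOLS.get(params.get("name"))
        if fn is None:
            return error(rid, -32602, f"Unknown tool: {params.get('name')}")
        try:
            value = fn(**params.get("arguments", {}))
        except Exception as exc:  # tool failures are reported back, not raised
            return reply(rid, {"content": [{"type": "text", "text": str(exc)}], "isError": True})
        return reply(rid, {"content": [{"type": "text", "text": str(value)}], "isError": False})
    return error(rid, -32601, f"Method not found: {method}")
```

Reporting a failed tool run as a normal result with isError set (rather than a protocol error) lets the model see what went wrong and retry, while unknown tools and methods still surface as JSON-RPC errors.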

3. Core Concepts & Capabilities

According to Hugging Face’s documentation and MCP courses, MCP servers typically offer capabilities in these categories:

| Capability | Description |
|---|---|
| Tools | Functions or actions the model can call (e.g. HTTP requests, calculations, database queries) |
| Resources | Data assets the model can query (files, datasets, documents) |
| Prompts | Predefined prompt templates or instructions that can be reused |
| Subscriptions / Notifications | Ability for the server to push updates (tool-list changes, resource updates) |

A model may decide, based on context or reasoning, to call a tool, read a resource, or use one of the server's prompt templates.
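These categories are not just documentation: during the initialize handshake, a server declares which of them it supports, and the listChanged/subscribe flags are what the Subscriptions / Notifications row refers to. A minimal sketch of building that initialize result, assuming protocol revision 2025-03-26 and a hypothetical server name:

```python
from typing import Any, Dict


def build_initialize_result(
    has_tools: bool,
    has_resources: bool,
    has_prompts: bool,
    name: str = "example-server",  # hypothetical server name
) -> Dict[str, Any]:
    """Advertise only the capability categories this server actually implements."""
    caps: Dict[str, Any] = {}
    if has_tools:
        # listChanged: the server may send notifications/tools/list_changed
        caps["tools"] = {"listChanged": True}
    if has_resources:
        # subscribe: clients may subscribe to updates on individual resources
        caps["resources"] = {"subscribe": True, "listChanged": True}
    if has_prompts:
        caps["prompts"] = {"listChanged": True}
    return {
        "protocolVersion": "2025-03-26",  # assumption: use the revision you target
        "capabilities": caps,
        "serverInfo": {"name": name, "version": "0.1.0"},
    }
```

Omitting a category entirely tells the client not to call the corresponding methods (for example resources/list), which is cleaner than advertising everything and returning errors.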

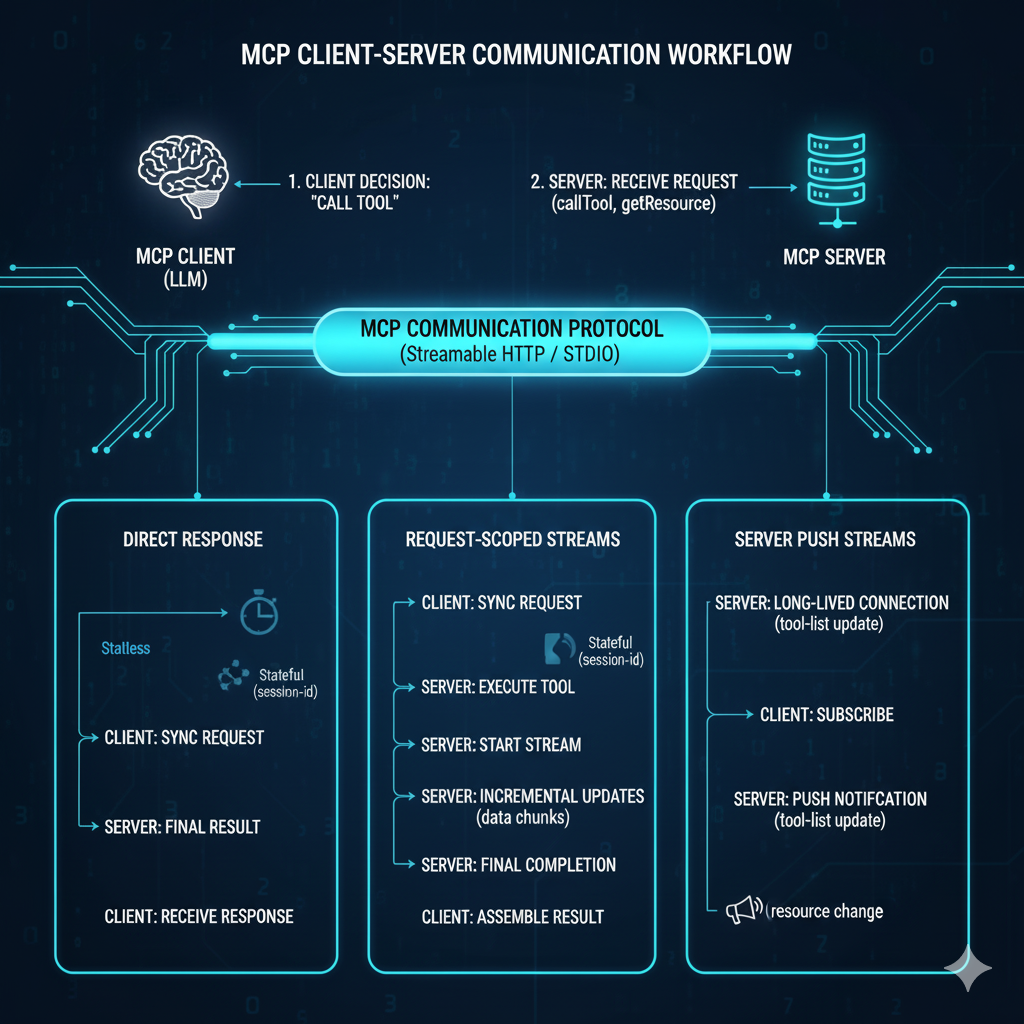

4. Transport / Communication Modes

One of the trickier design questions in MCP is how the client and server talk to each other. The protocol supports multiple transport modes, and the Hugging Face MCP Server supports several of them.

Here are the main transport modes:

| Transport Mode | Use Case | Bidirectional? | Notes |
|---|---|---|---|
| STDIO | Local client and server on the same machine | Yes | Useful for embedding and local development. |
| HTTP with SSE | Remote connection over HTTP / Server-Sent Events | Yes | The original remote transport; deprecated in the 2025-03-26 spec revision in favor of Streamable HTTP. |
| Streamable HTTP | Modern HTTP transport supporting streaming and push | Yes | More flexible; replaces HTTP with SSE. |
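For the STDIO row, the MCP specification frames messages as newline-delimited JSON-RPC over the server process's stdin and stdout, with no embedded newlines inside a message. A standard-library sketch of that framing, using io.StringIO in place of real pipes:

```python
import io
import json
from typing import Iterator, TextIO


def write_message(stream: TextIO, message: dict) -> None:
    """Write one JSON-RPC message per line (newline-delimited JSON)."""
    # json.dumps escapes newlines inside strings, so the message never spans lines.
    stream.write(json.dumps(message, separators=(",", ":")) + "\n")
    stream.flush()


def read_messages(stream: TextIO) -> Iterator[dict]:
    """Yield each non-empty line of the stream as a parsed message."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# In a real STDIO server these would be sys.stdin / sys.stdout (logs go to stderr).
buf = io.StringIO()
write_message(buf, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(buf, {"jsonrpc": "2.0", "method": "notifications/message",
                    "params": {"level": "info", "data": "line1\nline2"}})
buf.seek(0)
messages = list(read_messages(buf))
```

Because stdout is the protocol channel, anything else a STDIO server prints there (stray debug output, for instance) corrupts the stream; diagnostics belong on stderr.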

Streamable HTTP details

- Streamable HTTP allows both request/response and push-style interactions.
- MCP supports three interaction patterns under Streamable HTTP: Direct Response, Request-Scoped Streams, and Server Push Streams.
- Direct Response is simple request/response (no streaming), which suits stateless, synchronous tasks.
- Request-Scoped Streams let the server send incremental updates or progress (e.g. for a long-running tool call) tied to a particular request.
- Server Push Streams let the server push unsolicited messages (e.g. tool-list updates) to the client over a long-lived stream.

Which pattern to choose depends on whether your tools are long-running and whether you need server-initiated signals.
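The difference between Direct Response and a Request-Scoped Stream shows up in what the server writes back to a POST. The sketch below assumes a hypothetical slow tool: without streaming it returns a single application/json body; with streaming it emits notifications/progress events (only if the client supplied a progressToken) followed by the final result, all as Server-Sent Events. Server Push Streams would use a separate long-lived stream opened by the client, which is not shown.

```python
import json
from typing import Tuple


def sse_event(message: dict) -> str:
    """Encode one JSON-RPC message as a Server-Sent Events 'message' event."""
    return f"event: message\ndata: {json.dumps(message)}\n\n"


def respond(request: dict, steps: int, stream: bool) -> Tuple[str, str]:
    """Return (content_type, body) for a hypothetical long-running tool call."""
    final = {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"content": [{"type": "text", "text": "done"}], "isError": False},
    }
    if not stream:
        # Direct Response: one plain JSON body, nothing to keep open.
        return "application/json", json.dumps(final)
    # Request-Scoped Stream: progress notifications first, then the final result.
    token = request.get("params", {}).get("_meta", {}).get("progressToken")
    events = []
    if token is not None:  # progress is only sent if the client asked for it
        for i in range(1, steps + 1):
            progress = {
                "jsonrpc": "2.0",
                "method": "notifications/progress",
                "params": {"progressToken": token, "progress": i, "total": steps},
            }
            events.append(sse_event(progress))
    events.append(sse_event(final))
    return "text/event-stream", "".join(events)
```

A real server would write each event as the work progresses rather than joining them at the end; the joined string just makes the wire format easy to inspect.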

5. State vs Stateless Design

MCP servers can be stateless or stateful:

- Stateless: Each request is independent. No session IDs. Easier to scale horizontally.
- Stateful: The server maintains session context (via the mcp-session-id HTTP header) so it can correlate follow-up streams, elicitations, sampling, or multi-step dialogues.

If your tools require multi-step interaction or server-initiated messages (server push), stateful design may be necessary. But stateful servers introduce complexity (session affinity, shared memory, resumption logic).
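The trade-off is easiest to see side by side. Below is a minimal sketch of an HTTP POST handler built on a hypothetical in-memory SessionStore: in stateless mode it ignores sessions entirely; in stateful mode it issues an id on initialize and then requires the client to echo it in the mcp-session-id header. The 400 and 404 statuses follow the Streamable HTTP guidance for a missing or unknown session; the payloads are placeholders.

```python
import uuid
from typing import Dict, Optional, Tuple


class SessionStore:
    """In-memory sessions; multiple replicas would need shared storage or sticky routing."""

    def __init__(self) -> None:
        self._sessions: Dict[str, dict] = {}

    def create(self) -> str:
        sid = str(uuid.uuid4())  # unguessable id handed back to the client
        self._sessions[sid] = {"history": []}
        return sid

    def get(self, sid: str) -> Optional[dict]:
        return self._sessions.get(sid)


def handle_post(headers: Dict[str, str], body: dict, store: SessionStore,
                stateful: bool) -> Tuple[int, Dict[str, str], dict]:
    """Return (status, response_headers, payload); header names assumed lowercased."""
    if not stateful:
        # Stateless: every request stands alone, so any replica can serve it.
        return 200, {}, {"handled": body.get("method")}
    if body.get("method") == "initialize":
        return 200, {"Mcp-Session-Id": store.create()}, {"handled": "initialize"}
    sid = headers.get("mcp-session-id")
    if sid is None:
        return 400, {}, {"error": "missing session id"}
    session = store.get(sid)
    if session is None:
        return 404, {}, {"error": "unknown or expired session; re-initialize"}
    session["history"].append(body.get("method"))  # context for multi-step dialogues
    return 200, {}, {"handled": body.get("method"), "turns": len(session["history"])}
```

The 404 branch is where the "resumption logic" cost shows up: once a replica restarts or a session expires, the client has to notice and start over with a new initialize.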

6. The Hugging Face MCP Server: A Case Study

Hugging Face has built and open-sourced their MCP Server, which provides a robust example of how a real MCP server works.

Key Design Choices

- They support STDIO, SSE, and Streamable HTTP transports.
- Their production deployment uses Streamable HTTP with stateless Direct Responses, because for many use cases tool calls are synchronous and don't require streaming.
- They manage tool access per user: authenticated users can get custom tool lists; anonymous users get default tools.
- The server dynamically updates client tool lists and supports tool-list change notifications.
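The per-user tool lists and change notifications described above can be sketched as follows. This is not Hugging Face's code: the tool names are hypothetical, authentication is reduced to a user id (None for anonymous), and notifications are queued in an outbox rather than written to a live stream. The notification method itself, notifications/tools/list_changed, is the one MCP defines; on receiving it, a client re-fetches tools/list.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical tool names; not the Hugging Face server's actual tool set.
DEFAULT_TOOLS = ["model_search", "dataset_search"]


class ToolRegistry:
    """Per-user tool lists with list-changed notifications queued for delivery."""

    def __init__(self) -> None:
        self._user_tools: Dict[str, List[str]] = {}
        self.outbox: List[Tuple[str, dict]] = []  # (user, notification) pairs

    def tools_for(self, user: Optional[str]) -> List[str]:
        if user is None:  # anonymous callers always get the defaults
            return list(DEFAULT_TOOLS)
        return list(self._user_tools.get(user, DEFAULT_TOOLS))

    def set_user_tools(self, user: str, tools: List[str]) -> None:
        before = self.tools_for(user)
        self._user_tools[user] = list(tools)
        if self.tools_for(user) != before:
            # Only notify when the visible list actually changed.
            self.outbox.append(
                (user, {"jsonrpc": "2.0", "method": "notifications/tools/list_changed"})
            )
```

Skipping the notification when nothing changed avoids needless tools/list round-trips from every connected client.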

Spaces as Tools via MCP

Hugging Face allows exposing Spaces (Gradio apps) as MCP tools. If a Space has an MCP badge, it becomes callable from MCP clients.

This means an AI agent can “use” a Hugging Face Space (e.g. an image generation or summarization app) as a tool, via MCP, without custom API wiring.

7. Security & Risks

With great power comes great risk. MCP introduces several security challenges:

Known Risks & Academic Findings

- A recent safety audit shows potential vulnerabilities: malicious MCP servers could exploit tool invocation to execute harmful code, leak credentials, or perform remote access attacks.
- A newer attack, MPMA (MCP Preference Manipulation Attack), enables a malicious MCP server to manipulate LLMs into preferring that server, potentially for monetization.

Mitigations & Best Practices

- Tool permission restrictions: Limit which tools are callable by whom.
- Approval/consent flows: Ask for user permission before executing tools with side effects.
- Sandboxing: Run tool execution in isolated sandboxes (containers, restricted environments).
- Input validation/sanitization: Ensure that tool arguments are safe.
- Session timeouts and rate limits: Protect against misuse or replay attacks.
- Security audits/scanning: Use tools like MCPSafetyScanner.
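Several of these mitigations are simple checks that run before a tool executes. The sketch below combines a per-user allow-list, a toy argument check, a sliding-window rate limit, and a consent callback for side-effecting tools. The user names, tool names, limits, and the argument rule are all hypothetical, and sandboxing and scanning are not covered because they need infrastructure outside the request handler.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, Set, Tuple

# Hypothetical policy tables; a real server would load these per deployment.
ALLOWED: Dict[str, Set[str]] = {"alice": {"search", "send_email"}, "anonymous": {"search"}}
SIDE_EFFECT_TOOLS = {"send_email"}
Approver = Callable[[str, str, dict], bool]


class Guard:
    """Checks permission, argument shape, rate limit, and consent before a tool runs."""

    def __init__(self, max_calls: int, window_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_calls, self.window_s, self.clock = max_calls, window_s, clock
        self.calls: Dict[str, Deque[float]] = defaultdict(deque)

    def check(self, user: str, tool: str, args: dict, approve: Approver) -> Tuple[bool, str]:
        if tool not in ALLOWED.get(user, set()):
            return False, "tool not permitted for this user"
        # Toy validation rule: only flat scalar arguments of bounded length.
        for value in args.values():
            if not isinstance(value, (str, int, float, bool)) or len(str(value)) > 1000:
                return False, "invalid arguments"
        now, recent = self.clock(), self.calls[user]
        while recent and now - recent[0] >= self.window_s:  # drop calls outside the window
            recent.popleft()
        if len(recent) >= self.max_calls:
            return False, "rate limit exceeded"
        if tool in SIDE_EFFECT_TOOLS and not approve(user, tool, args):
            return False, "user declined"
        recent.append(now)
        return True, "ok"
```

Injecting the clock keeps the rate limiter testable without sleeping, and the approve callback is where a client-side consent prompt would plug in.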

Being mindful of these is essential, especially in production environments or when your MCP server handles sensitive data.

8. How to Build or Deploy an MCP Server

Basic Steps

- Define Tool Interfaces: Decide which tools your server will expose (HTTP calls, DB queries, file access, etc.) and specify their input/output schemas.
- Select Transport Mode(s): For low-latency synchronous use, Direct Response over Streamable HTTP is often enough. For streaming or push, enable Request-Scoped or Server Push Streams.
- Session Strategy: Choose stateless or stateful depending on whether your tools require multi-step interactions.
- Authorization & Access Control: Integrate authentication (e.g. API tokens, OAuth), limit per-user tool sets, and enforce security policies.
- Implement the Protocol: Use the MCP SDKs (Python, TypeScript, etc.) to handle MCP messages (initialize, tools/list, tools/call, notifications, etc.).
- Observability & Logging: Track requests, errors, tool-usage metrics, and client connections.
- Deployment & Scaling: Deploy behind load balancers (if stateless), shard stateful servers, and manage reconnection and resumption logic.
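The first step, defining tool interfaces, amounts to publishing a name, a description, and a JSON Schema (inputSchema) for each tool, then checking incoming arguments against it. The tool below is hypothetical, and the validator handles only a small subset of JSON Schema for illustration; a production server would more likely rely on its SDK or a complete JSON Schema validator.

```python
from typing import Any, Dict, List

# Hypothetical tool definition in the shape MCP's tools/list returns.
FORECAST_TOOL: Dict[str, Any] = {
    "name": "get_forecast",
    "description": "Daily weather forecast for a city.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "city": {"type": "string"},
            "days": {"type": "integer", "minimum": 1, "maximum": 7},
        },
        "required": ["city"],
        "additionalProperties": False,
    },
}

_PY_TYPES = {"string": str, "integer": int, "number": (int, float), "boolean": bool}


def validate(schema: Dict[str, Any], args: Dict[str, Any]) -> List[str]:
    """Check a small JSON Schema subset: required keys, extra keys, types, numeric bounds."""
    errors = [f"missing required argument '{k}'" for k in schema.get("required", []) if k not in args]
    props = schema.get("properties", {})
    for key, value in args.items():
        spec = props.get(key)
        if spec is None:
            if schema.get("additionalProperties", True) is False:
                errors.append(f"unexpected argument '{key}'")
            continue
        kind = spec["type"]
        # bool is a subclass of int in Python, so reject it explicitly for numbers.
        if not isinstance(value, _PY_TYPES[kind]) or (kind in ("integer", "number") and isinstance(value, bool)):
            errors.append(f"'{key}' should be of type {kind}")
            continue
        if "minimum" in spec and value < spec["minimum"]:
            errors.append(f"'{key}' must be >= {spec['minimum']}")
        if "maximum" in spec and value > spec["maximum"]:
            errors.append(f"'{key}' must be <= {spec['maximum']}")
    return errors
```

Returning a list of messages instead of raising on the first problem lets the server send the model every issue at once, so it can fix its arguments in a single retry.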

Available Implementations

- shreyaskarnik / huggingface-mcp-server: A read-only server exposing Hugging Face Hub APIs (models, datasets, spaces).
- evalstate / mcp-hfspace: Lightweight MCP server connecting to Spaces; supports STDIO, SSE, and Streamable HTTP.
- Hugging Face's official MCP Server: supports dynamic tools, streaming, direct responses, and observability dashboards.

You can adapt these or build your custom MCP server depending on your domain (enterprise, chatbot, knowledge base, etc.).

9. Use Cases & Benefits

Use Cases

- Agents that browse models/datasets on Hugging Face via an MCP server.
- AI assistants that call external APIs (e.g. weather, calendar) via MCP tools.
- Interactive systems where the server can ask the user follow-up questions (elicitation) via streaming.
- Tool chains or orchestrations: the model picks which tools to call based on the user's request.

Benefits

- Standardized integration: No custom glue code for every model-to-tool connection.
- Dynamic tool management: Tool lists or capabilities can change without redeploying the client.
- Separation of concerns: The LLM doesn't need internal logic for every API; it uses the MCP server.
- Extensibility: You can add new tools or data resources on the server side, and clients gain access automatically.

In fact, Hugging Face has already integrated MCP support into the Hub: you can connect your MCP client to the Hub and use curated tools (Spaces, model and dataset exploration) via one URL.

10. Conclusion & Call to Action

The MCP Server is a key piece in the next generation of AI agents: it abstracts tool access, standardizes integration, and lets LLMs dynamically call external services in a controlled protocol.

If you're building AI agents, you'll likely need an MCP server to manage tools, resources, authentication, and streaming. Want help designing or deploying one (for FastAPI, Django, or custom contexts) in Europe/Belgium? Contact us for a tailored quote and consultation. Let's build your MCP-powered agent infrastructure together.