Introduction: Why LLM Optimization Is Critical in Europe

Europe’s AI ecosystem is evolving rapidly. From the AI research hubs in Brussels and Ghent to pan-European innovation centers in Germany and France, optimizing Large Language Models (LLMs) is at the core of building cost-efficient, GDPR-compliant, and high-performance AI systems.

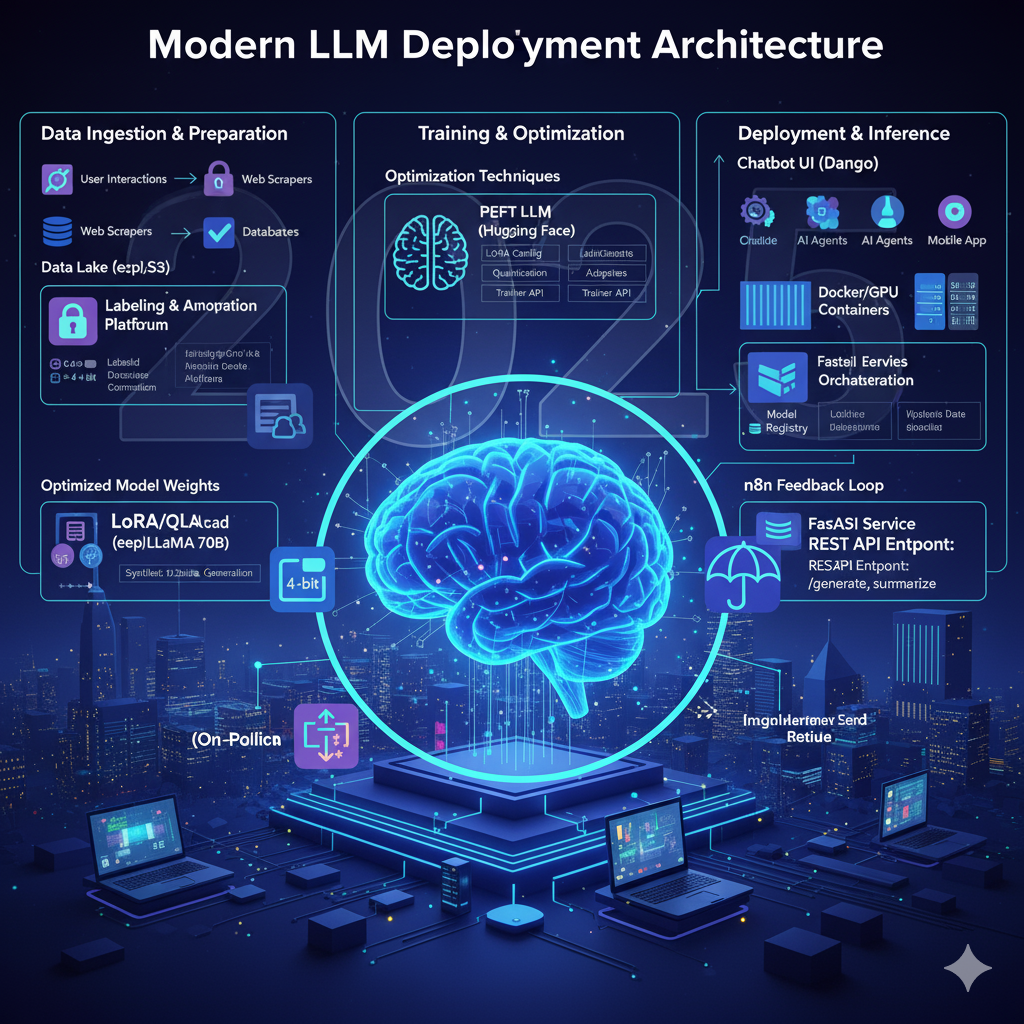

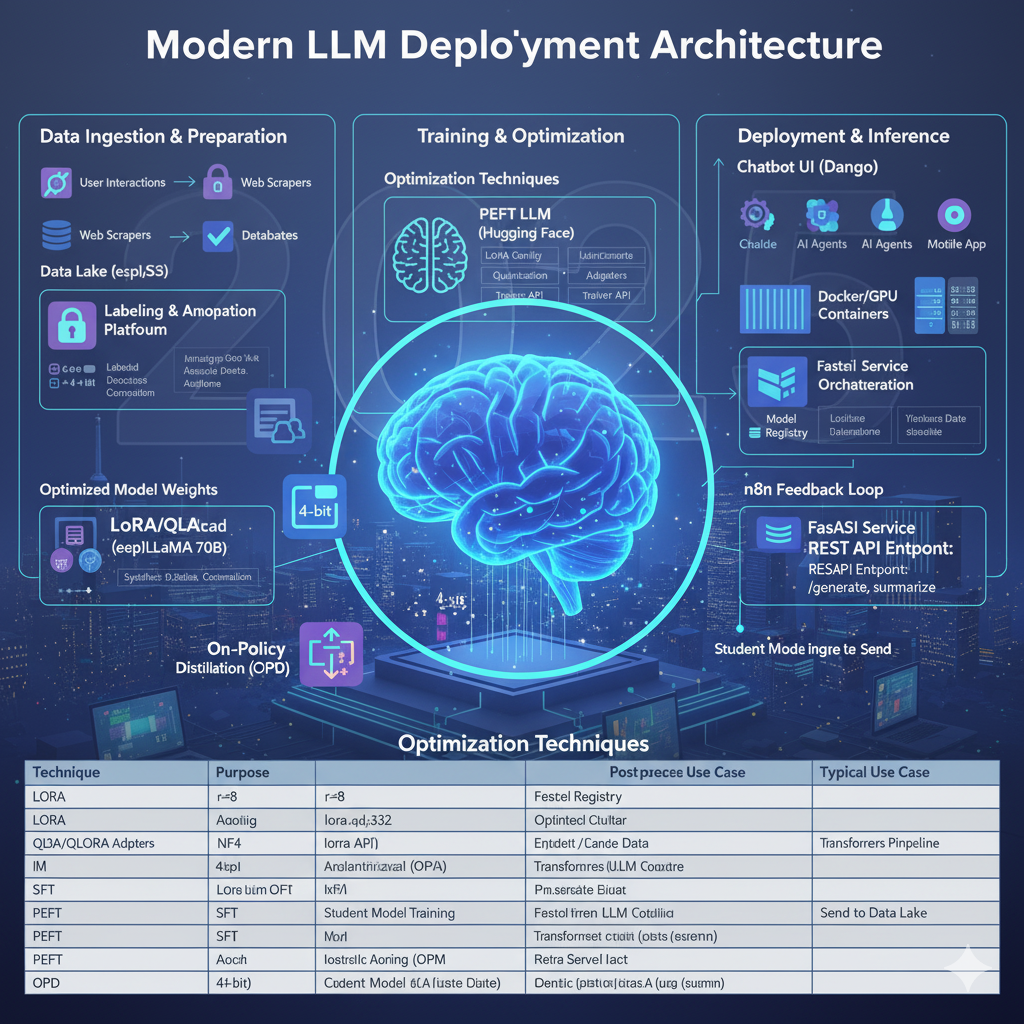

With the European Union’s AI Act setting new standards for transparency and accountability, developers across Belgium are embracing techniques like LoRA, QLoRA, SFT, PEFT, and OPD to fine-tune and deploy LLMs in local data environments without compromising compliance.

In this guide, we’ll explore how European AI engineers can efficiently fine-tune, compress, and deploy models using open-source frameworks such as Hugging Face Transformers, FastAPI, and Django, leveraging GPU clusters, and cloud platforms like OVHcloud, Hetzner, and Scaleway — all within EU data jurisdictions.

1. The European Context of LLM Optimization

LLMs such as LLaMA-3, Mistral, and Falcon are driving innovation in multilingual and domain-specific applications — from Dutch and French chatbots in Belgium to AI-powered document summarizers for EU agencies.

However, fine-tuning and deploying these large models at scale remains resource-intensive. European developers face three major challenges:

-

Data sovereignty — ensuring all data processing remains within EU boundaries.

-

Hardware constraints — limited access to high-end GPUs.

-

Energy efficiency — aligning with EU sustainability standards.

Optimization techniques like LoRA and QLoRA directly address these issues by reducing training costs and hardware dependency, enabling AI startups in Belgium to innovate competitively against global players.

2. LoRA (Low-Rank Adaptation)

Concept Overview

LoRA (Low-Rank Adaptation) introduces a parameter-efficient fine-tuning mechanism. Instead of retraining the entire model, LoRA inserts low-rank matrices (A and B) into existing weight layers, updating only a small subset of parameters.

This drastically reduces VRAM usage and computation costs — essential for European labs that rely on shared GPU clusters or on-premises compute resources.

How LoRA Works

The core update equation:

W' = W + A * B

Here, W is the frozen pre-trained weight, while A and B are lightweight matrices optimized during training.

Advantages for EU Developers

✅ Train large models locally (EU data residency)

✅ Minimize carbon footprint — lower energy consumption

✅ Integrate easily with Hugging Face’s peft library

Implementation Example

from transformers import AutoModelForCausalLM

from peft import LoraConfig, get_peft_model

model = AutoModelForCausalLM.from_pretrained("meta-llama/Llama-2-7b-hf")

config = LoraConfig(r=8, lora_alpha=32, target_modules=["q_proj", "v_proj"])

model = get_peft_model(model, config)

LoRA’s lightweight design allows teams in Brussels, Ghent, and Leuven to fine-tune models without relying on large cloud GPUs.

3. QLoRA (Quantized LoRA)

Why Quantization Matters

Quantization compresses model weights from 16-bit floating-point precision to 4-bit integers. QLoRA combines quantization with LoRA’s efficiency, allowing 13B+ parameter models to train on single-GPU European workstations.

This is especially beneficial for academic institutions and small AI consultancies in Belgium working under limited budgets.

Setup Example

from transformers import AutoModelForCausalLM, BitsAndBytesConfig

bnb_config = BitsAndBytesConfig(load_in_4bit=True)

model = AutoModelForCausalLM.from_pretrained(

"meta-llama/Llama-2-13b", quantization_config=bnb_config

)

Benefits in EU Context

✅ Enables low-resource fine-tuning within EU data centers

✅ Reduces cloud costs under GDPR-compliant infrastructure

✅ Compatible with Hugging Face’s inference APIs

Many Belgian teams integrate QLoRA with FastAPI inference endpoints to deliver efficient AI agents to industries like fintech, logistics, and education.

4. SFT (Supervised Fine-Tuning)

Overview

Supervised Fine-Tuning (SFT) involves training LLMs on labeled datasets to specialize them for targeted tasks — a common approach in EU-funded research programs focusing on legal, healthcare, and multilingual domains.

Example training structure:

from transformers import Trainer, TrainingArguments

training_args = TrainingArguments(

output_dir="./results",

per_device_train_batch_size=2,

num_train_epochs=3,

)

trainer = Trainer(model=model, args=training_args, train_dataset=train_data)

trainer.train()

In Belgium, SFT has been applied in:

-

Dutch-French translation assistants

-

Legal document summarization

-

Multilingual university chatbots

5. PEFT (Parameter-Efficient Fine-Tuning)

Definition

PEFT provides a unified framework for efficient LLM tuning methods, including LoRA, Prefix Tuning, and Prompt Tuning — all crucial for reducing compute overhead.

European developers often rely on PEFT to scale models without breaching EU data compliance regulations.

Example

from peft import get_peft_model, PeftConfig

config = PeftConfig(task_type="CAUSAL_LM", peft_type="LORA")

model = get_peft_model(model, config)

By deploying PEFT in Hugging Face Spaces or private Django APIs, teams can maintain performance while minimizing environmental impact.

6. OPD (On-Policy Distillation)

What Is OPD?

OPD trains a smaller student model using teacher-generated data. This knowledge distillation reduces model size without major accuracy loss — critical for AI edge deployments in Europe.

European Advantage

✅ Aligns with the EU’s sustainability goals

✅ Reduces reliance on U.S.-based API services

✅ Enables AI deployment on on-premise or sovereign EU cloud platforms

Example:

# Pseudocode

teacher_output = teacher.generate(prompt)

student.train_on(teacher_output)

7. Combining Techniques in European AI Workflows

| Technique | Core Function | EU Use Case |

|---|---|---|

| LoRA | Efficient fine-tuning | On-prem model adaptation |

| QLoRA | Quantized training | Low-resource labs |

| SFT | Domain-specific learning | Legal/medical AI |

| PEFT | Modular efficiency | Multi-agent systems |

| OPD | Knowledge distillation | Edge AI in IoT |

8. Deploying Optimized LLMs in Django & FastAPI

European teams commonly use:

-

FastAPI → lightweight, async inference

-

Django → enterprise-grade REST and dashboards

-

n8n → automation workflows

Example FastAPI endpoint:

from fastapi import FastAPI

from transformers import pipeline

app = FastAPI()

summarizer = pipeline("summarization", model="facebook/bart-large-cnn")

@app.post("/summarize")

def summarize_text(text: str):

return summarizer(text, max_length=80)[0]['summary_text']

For EU compliance, store inference logs in GDPR-safe databases (PostgreSQL) and host models on EU-based infrastructure.

👉 Learn more: Hugging Face Image-to-Video Model Integration in FastAPI and Django

9. European Real-World Applications

-

Smart City Assistants (Brussels, Antwerp)

-

AI-powered HR Systems

-

Smart Home Automation

-

Retail and E-commerce Agents

-

Academic Chatbots for Multilingual Campuses

10. The Future of LLM Optimization in Europe

The next wave of AI optimization aligns closely with Europe’s digital priorities:

-

Dynamic quantization for energy-efficient inference

-

Federated fine-tuning preserving user data privacy

-

EU AI sandbox projects driving open collaboration

-

Sustainable AI computing clusters (Green Data Centers Belgium)

As Belgium continues to emerge as a key AI hub — connecting research institutions and industry — techniques like LoRA and PEFT will define how European developers balance innovation, efficiency, and ethical AI.

Conclusion

Optimizing Large Language Models (LLMs) with LoRA, QLoRA, SFT, PEFT, and OPD allows European developers and organizations to build efficient, secure, and sustainable AI systems that comply with EU AI Act and GDPR requirements.

From multilingual chatbots to real-time enterprise agents, these optimization strategies empower teams in Belgium and across Europe to fine-tune world-class AI models on local infrastructure — cost-effectively and responsibly.

If you’re looking to develop or integrate an AI-powered application using technologies like Hugging Face, FastAPI, Django, or LangGraph, we can help you design and deploy fully optimized, production-ready solutions tailored to your business or research needs.

👉 Contact us today for a personalized AI project consultation or quote — and let’s build your next-generation intelligent system together.